Feature Fields for Robotic Manipulation (F3RM) enables robots to interpret open-ended text prompts written in natural language, helping the machines manipulate unfamiliar objects. The system’s 3D feature fields could be helpful in environments that contain thousands of objects, such as warehouses. Images courtesy of the researchers.

Alex Shipps | Courtesy of MIT CSAIL

Imagine visiting a friend abroad and looking inside the refrigerator to see what would make a great breakfast. At first, many of the items look foreign, each encased in unfamiliar packaging and containers. Despite these visual differences, you begin to understand what each item is used for and pick them up as needed.

Inspired by humans’ ability to handle unfamiliar objects, a group at MIT’s Computer Science and Artificial Intelligence Laboratory (CSAIL) designed Feature Fields for Robotic Manipulation (F3RM), a system that blends 2D images with foundation model features into 3D scenes to help robots identify and grasp nearby objects. F3RM can interpret open-ended language prompts from humans, making the method useful in real-world environments that contain thousands of objects, such as warehouses and homes.

F3RM lets robots interpret open-ended text prompts written in natural language, which helps them manipulate objects. As a result, the machines can understand less specific requests from humans and still complete the desired task. For example, if a user asks a robot to “pick up the tall mug,” the robot can find and grab the item that best matches that description.

“It’s incredibly hard to build a robot that can really generalize in the real world,” said Ge Yang, a postdoc at the National Science Foundation AI Institute for Artificial Intelligence and Fundamental Interactions and MIT CSAIL. “We really want to figure out how to do that, so in this project, we’re trying to push for an aggressive level of generalization from three or four objects to everything we can find at the MIT Stata Center. We wanted to learn how to build a robot that’s as flexible as ourselves, so that it can grab and release objects that it’s never seen before.”

Learning “what’s where by looking”

This method can help robots pick items in large fulfillment centers, which are inevitably messy and unpredictable. In these warehouses, robots are often given descriptions of the inventory they need to identify. The robots must match the text provided to the target regardless of packaging differences, so that the customer’s order is delivered correctly.

For example, a major online retailer’s fulfillment center may contain millions of items, many of which the robot has never encountered before. To operate at that scale, the robot must understand the geometry and semantics of the various items, some of which are in tight spaces. Using F3RM’s advanced spatial and semantic perception capabilities, the robot can be more effective at finding objects, placing them in boxes, and then sending them for packing. Ultimately, this will help factory workers more efficiently deliver customer orders.

“One thing that often surprises people about F3RM is that the same system also works at room scale and building scale, and that it can be used to build simulation environments for robot learning and large maps,” Yang says. “But before we scale this work up further, we first want to make the system run really fast. That way, we can use this type of representation for more dynamic robot control tasks, hopefully in real time, so that robots handling more dynamic tasks can use it for perception.”

The MIT team points out that F3RM’s ability to understand a variety of scenes could be useful in urban and home environments. For example, the approach could help personalized robots identify and pick up specific items. The system would help robots understand their surroundings both physically and perceptually.

“Visual perception was defined by David Marr as the problem of knowing ‘what is where by looking,’” says senior author Phillip Isola, an associate professor of electrical engineering and computer science at MIT and a principal investigator at CSAIL. “Recent foundation models have become very good at knowing what they are looking at; they can recognize thousands of object categories and provide detailed text descriptions of images. At the same time, radiance fields have become very good at representing where things are in a scene. Combining these two approaches creates a representation of what is where in 3D, and our work shows that this combination is especially useful for robotic tasks, which require manipulating objects in 3D.”

Creating a “digital twin”

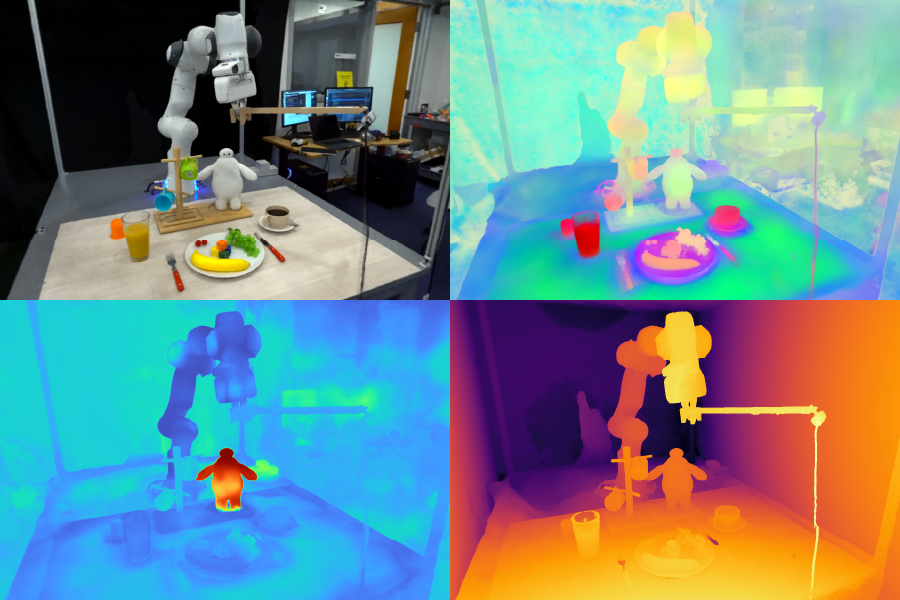

F3RM begins to understand its surroundings by taking pictures with a camera mounted on a selfie stick. The camera snaps 50 images at different poses, which are used to build a neural radiance field (NeRF), a deep learning method that constructs a 3D scene from 2D images. This collage of RGB photos creates a “digital twin” of the surroundings in the form of a 360-degree representation of what is nearby.

In addition to the highly detailed neural radiance field, F3RM builds a feature field that augments geometry with semantic information. The system uses CLIP, a vision foundation model trained on hundreds of millions of images to efficiently learn visual concepts. By reconstructing the 2D CLIP features for the images taken with the selfie stick, F3RM effectively lifts the 2D features into a 3D representation.
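The article does not spell out how 2D features are lifted into 3D. One common approach in NeRF-style feature fields, shown here as a minimal sketch and not as the authors’ implementation, is to render a feature vector along each camera ray with the same volume-rendering weights used for color, then penalize its distance from the 2D feature at that pixel. The function names, the toy two-dimensional features, and the sample values are all illustrative assumptions.

```python
import math

def render_feature(densities, features, deltas):
    """Volume-render a feature vector along one ray.

    densities: density (sigma) at each sample along the ray
    features:  feature vector predicted at each sample
    deltas:    distance between consecutive samples
    All inputs are hypothetical stand-ins for a trained field's outputs.
    """
    transmittance = 1.0  # fraction of the ray not yet absorbed
    rendered = [0.0] * len(features[0])
    for sigma, feat, delta in zip(densities, features, deltas):
        alpha = 1.0 - math.exp(-sigma * delta)  # opacity of this sample
        weight = transmittance * alpha          # contribution to the pixel
        rendered = [r + weight * f for r, f in zip(rendered, feat)]
        transmittance *= 1.0 - alpha
    return rendered

def feature_loss(rendered, target):
    """Squared error between the rendered feature and the 2D target feature."""
    return sum((r - t) ** 2 for r, t in zip(rendered, target))

# Toy ray: empty space, then an opaque surface, then an occluded sample.
ray_feature = render_feature(
    densities=[0.0, 1e9, 5.0],
    features=[[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]],
    deltas=[0.1, 0.1, 0.1],
)
```

In this toy ray, the rendered feature is dominated by the first opaque sample, which is how a per-pixel 2D feature ends up attached to a surface point in 3D.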

Keeping things open-ended

After a few demonstrations, the robot applies what it knows about geometry and semantics to grasp objects it has never encountered before. When a user submits a text query, the robot searches the space of possible grasps to identify the one most likely to pick up the object the user has requested. Each potential option is scored based on its relevance to the prompt, its similarity to the demonstrations the robot was trained on, and whether it causes a collision. It then selects the grasp with the highest score and executes it.
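The selection step described above can be sketched roughly as follows. This is a simplified illustration, not the authors’ code: the `GraspCandidate` fields, the equal weights, and the rule that any colliding grasp is rejected outright are assumptions made for the example.

```python
import math
from dataclasses import dataclass

@dataclass
class GraspCandidate:
    name: str
    language_relevance: float  # match between the grasp region and the text prompt, in [0, 1]
    demo_similarity: float     # match to the demonstrated grasps, in [0, 1]
    collides: bool             # True if executing the grasp would hit something

def score_grasp(candidate, w_language=0.5, w_demo=0.5):
    """Combine the three criteria into one score (weights are hypothetical)."""
    if candidate.collides:
        return -math.inf  # assumption: colliding grasps are never selected
    return (w_language * candidate.language_relevance
            + w_demo * candidate.demo_similarity)

def select_grasp(candidates):
    """Return the highest-scoring collision-free grasp, or None if there is none."""
    if not candidates:
        return None
    best = max(candidates, key=score_grasp)
    return None if score_grasp(best) == -math.inf else best

candidates = [
    GraspCandidate("mug_handle", 0.90, 0.80, collides=False),
    GraspCandidate("mug_rim", 0.95, 0.90, collides=True),
    GraspCandidate("bowl_edge", 0.40, 0.90, collides=False),
]
chosen = select_grasp(candidates)
```

Here `mug_rim` has the best raw scores but is rejected because it collides, so `mug_handle` is chosen.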

To demonstrate the system’s ability to interpret open-ended requests from humans, the researchers prompted the robot to pick up Baymax, a character from Disney’s “Big Hero 6.” Although F3RM had never been directly trained to pick up a toy of the cartoon superhero, the robot used its spatial awareness and the vision-language features from the foundation models to decide which object to grasp and how to pick it up.

F3RM also lets users specify which object they want the robot to handle at different levels of linguistic detail. For example, if there is a metal mug and a glass mug, the user can ask the robot for the “glass mug.” If the bot sees two glass mugs, one filled with coffee and the other with juice, the user can ask for the “glass mug with coffee.” The foundation model features embedded in the feature field enable this level of open-ended understanding.
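A toy sketch can show why a more detailed prompt helps. It assumes, as is typical for CLIP-style models, that text and visual features live in a shared embedding space and are compared with cosine similarity. The four-dimensional vectors below are hand-made placeholders (axes: glass, metal, coffee, juice), not real CLIP embeddings.

```python
import math

def cosine_similarity(a, b):
    """Cosine of the angle between two vectors; 0.0 if either is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0
    return dot / (norm_a * norm_b)

def best_match(query, object_features):
    """Name of the object whose feature is most similar to the query."""
    return max(object_features,
               key=lambda name: cosine_similarity(query, object_features[name]))

# Hypothetical features; axes are (glass, metal, coffee, juice).
object_features = {
    "metal mug": [0.0, 1.0, 0.0, 0.0],
    "glass mug with coffee": [1.0, 0.0, 1.0, 0.0],
    "glass mug with juice": [1.0, 0.0, 0.0, 1.0],
}
coarse_query = [1.0, 0.0, 0.0, 0.0]    # stands in for "glass mug"
detailed_query = [1.0, 0.0, 1.0, 0.0]  # stands in for "glass mug with coffee"

picked = best_match(detailed_query, object_features)
```

With the coarse query, both glass mugs score identically, so the request is ambiguous. The detailed query separates them and selects the mug with coffee.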

“If I showed a person how to pick up a mug by the lip, they could easily transfer that knowledge to picking up other objects with similar geometry, such as bowls, measuring beakers, or even rolls of tape. For robots, achieving this level of adaptability has been very challenging,” said co-lead author William Shen, an MIT PhD student and CSAIL affiliate. “F3RM combines geometric understanding with the semantics of foundation models trained on internet-scale data to enable this level of aggressive generalization from only a handful of demonstrations.”

Shen and Yang wrote the paper under Isola’s supervision, with MIT professor and CSAIL principal investigator Leslie Pack Kaelbling and undergraduate students Alan Yu and Jansen Wong as co-authors. The team received support from Amazon.com Services, the National Science Foundation, the Air Force Office of Scientific Research, the Office of Naval Research’s Multidisciplinary University Initiative, the Army Research Office, the MIT-IBM Watson AI Lab, and the MIT Quest for Intelligence. Their work will be presented at the 2023 Conference on Robot Learning.

MIT News