Low-Rank Adaptation (LoRA) is a new technique for fine-tuning large-scale pre-trained models. Such models are usually trained on general-domain data so that as much data as possible is available. To get better results on tasks such as chat or question answering, they can then be further ‘fine-tuned’, or adapted, on domain-specific data.

It is possible to fine-tune a model simply by initializing it with the pre-trained weights and training further on the domain-specific data. As pre-trained models grow, however, a full forward and backward cycle requires a large amount of computing resources. Fine-tuning by simply continuing training also requires a complete copy of all parameters for each task or domain the model is adapted to.

The paper LoRA: Low-Rank Adaptation of Large Language Models proposes a solution to both problems using low-rank matrix decomposition. According to the paper, it can reduce the number of trainable weights by 10,000 times and GPU memory requirements by 3 times.

Method

The problem of fine-tuning a neural network can be expressed as finding a \(\Delta\Theta\) that minimizes \(L(X, y; \Theta_0 + \Delta\Theta)\), where \(L\) is the loss function, \(X\) and \(y\) are the data, and \(\Theta_0\) are the weights of the pre-trained model.

We learn the parameters \(\Delta\Theta\), whose dimension \(|\Delta\Theta|\) equals \(|\Theta_0|\). When \(|\Theta_0|\) is very large, as in large-scale pre-trained models, finding \(\Delta\Theta\) becomes computationally challenging. In addition, a new \(\Delta\Theta\) parameter set has to be learned for each task, which makes it even harder to deploy fine-tuned models when there are more than a few specific tasks.

LoRA proposes using an approximation \(\Delta\Phi \approx \Delta\Theta\) with \(|\Delta\Phi| \ll |\Delta\Theta|\). The observation is that neural networks have many dense layers that perform matrix multiplication, and while these layers typically have full rank during pre-training, the weight updates needed to adapt to a specific task have a low “intrinsic dimension”.

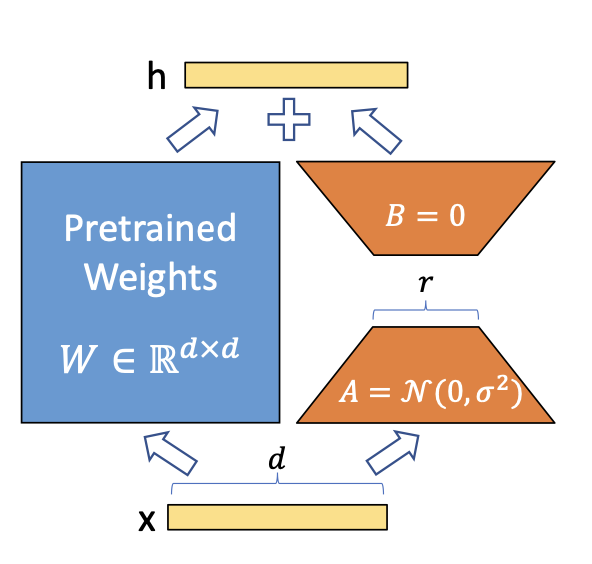

A simple matrix decomposition is applied to each weight matrix update \(\Delta\theta \in \Delta\Theta\). Considering \(\Delta\theta_i \in \mathbb{R}^{d \times k}\), the update for the \(i\)th weight matrix in the network, LoRA approximates it with:

\(\Delta\theta_i \approx \Delta\phi_i = BA\)

where \(B \in \mathbb{R}^{d \times r}\), \(A \in \mathbb{R}^{r \times k}\) and the rank \(r \ll \min(d, k)\). So instead of learning \(d \times k\) parameters, we now need to learn only \((d + k) \times r\), which is much smaller because the full count is a product of \(d\) and \(k\) while the low-rank count grows only with their sum. In practice, \(\Delta\theta_i\) is scaled by \(\frac{\alpha}{r}\) before being added to \(\theta_i\), which can be interpreted as a ‘learning rate’ for the LoRA update.
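
The shapes and parameter counts above can be checked with a few lines of base R. This is only a sketch under stated assumptions: the sizes (`d = 6`, `k = 5`, `r = 2`, and the larger `4096` by `4096` layer with `r = 8`) and the random values are hypothetical, chosen purely for illustration, and plain matrices stand in for network weights.

```r
set.seed(123)

# Hypothetical small sizes, for illustration only
d <- 6; k <- 5; r <- 2; alpha <- 1

B <- matrix(rnorm(d * r), nrow = d, ncol = r)   # d x r
A <- matrix(rnorm(r * k), nrow = r, ncol = k)   # r x k

# The scaled low-rank update has the same shape as the full update
delta_phi <- (alpha / r) * (B %*% A)
dim(delta_phi)                    # 6 5

# Its rank can never exceed r, whatever values A and B take
update_rank <- qr(delta_phi)$rank

# Parameter counts: full update vs. low-rank factors
full_params <- d * k              # 30
lora_params <- (d + k) * r        # 22

# The saving grows quickly with layer size (hypothetical 4096 x 4096 layer, r = 8)
big_full <- 4096 * 4096           # 16777216
big_lora <- (4096 + 4096) * 8     # 65536
big_full / big_lora               # 256
```

For tiny matrices the saving is modest, but for the larger hypothetical layer the low-rank factors hold 256 times fewer parameters than the full update.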

LoRA does not increase inference latency: once fine-tuning is done, you can simply update the weights in \(\Theta\) by adding to each \(\theta_i\) its respective \(\Delta\theta_i \approx \Delta\phi_i\). It also makes it simpler to deploy multiple task-specific models on top of one large model, because \(|\Delta\Phi|\) is much smaller than \(|\Delta\Theta|\).
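
To spell out why merging leaves the inference cost unchanged, consider, as an assumption for illustration, a single layer that computes \(h = \theta_i x\) for an input \(x \in \mathbb{R}^{k}\). Writing the scaled LoRA update as a separate branch and then folding it into the weight gives:

\[
h = \theta_i x + \frac{\alpha}{r} B A x = \left(\theta_i + \frac{\alpha}{r} B A\right) x = \theta_i' x,
\qquad \theta_i' = \theta_i + \frac{\alpha}{r} B A \in \mathbb{R}^{d \times k}
\]

The merged matrix \(\theta_i'\) has the same shape as \(\theta_i\), so a forward pass through the adapted layer costs the same as one through the original layer.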

Implementing in torch

Now that we have an idea of how LoRA works, let’s implement it using torch for a minimal problem. Our plan is the following:

- Simulate training data using a simple \(y = X\theta\) model, with \(\theta \in \mathbb{R}^{1001 \times 1000}\).

- Train a full-rank linear model to estimate \(\theta\). This will be our ‘pre-trained’ model.

- Simulate a different distribution by applying a transformation to \(\theta\).

- Train a low-rank model using the pre-trained weights.

Let’s start by simulating the training data.

library(torch)

# number of observations, input features and output features
n <- 10000
d_in <- 1001
d_out <- 1000

# the 'true' weights and the simulated data
thetas <- torch_randn(d_in, d_out)

X <- torch_randn(n, d_in)
y <- torch_matmul(X, thetas)

Now let’s define the base model.

model <- nn_linear(d_in, d_out, bias = FALSE)

We also define a function for training a model, which we reuse later. It runs a standard torch training loop using the Adam optimizer. The model weights are updated in place.

train <- function(model, X, y, batch_size = 128, epochs = 100) {
  opt <- optim_adam(model$parameters)

  for (epoch in 1:epochs) {
    # n is the global number of observations defined above
    for (i in seq_len(n %/% batch_size)) {
      # draw a random mini-batch of rows
      idx <- sample.int(n, size = batch_size)
      loss <- nnf_mse_loss(model(X[idx, ]), y[idx, ])

      with_no_grad({
        opt$zero_grad()
        loss$backward()
        opt$step()
      })
    }

    # report the loss on the full data set every 10 epochs
    if (epoch %% 10 == 0) {
      with_no_grad({
        loss <- nnf_mse_loss(model(X), y)
      })
      cat("(", epoch, ") Loss:", loss$item(), "\n")
    }
  }
}

The model is then trained:

train(model, X, y)
#> ( 10 ) Loss: 577.075
#> ( 20 ) Loss: 312.2
#> ( 30 ) Loss: 155.055
#> ( 40 ) Loss: 68.49202
#> ( 50 ) Loss: 25.68243
#> ( 60 ) Loss: 7.620944
#> ( 70 ) Loss: 1.607114
#> ( 80 ) Loss: 0.2077137
#> ( 90 ) Loss: 0.01392935
#> ( 100 ) Loss: 0.0004785107

OK, so now we have our pre-trained base model. Let’s suppose we have data from a slightly different distribution, which we simulate using:

thetas2 <- thetas + 1
X2 <- torch_randn(n, d_in)
y2 <- torch_matmul(X2, thetas2)

If we apply our base model to this new distribution, we don’t get good performance:

nnf_mse_loss(model(X2), y2)
#> torch_tensor
#> 992.673
#> [ CPUFloatType{} ][ grad_fn = <MseLossBackward0> ]

We now fine-tune our initial model. The new data differs only slightly from the initial data: every entry of theta is shifted by 1. This means the weight update is not expected to be complex, and we shouldn’t need a full-rank update to get good results.
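
To see why a low-rank update should be enough here, note that the difference between the new and the old weights is a matrix of ones, which is the product of a column of ones and a row of ones and therefore has rank 1. The base R sketch below checks this on a small matrix; the sizes `5` and `4` are hypothetical stand-ins for the 1001 and 1000 used in the simulation.

```r
# Hypothetical small sizes standing in for d_in = 1001 and d_out = 1000
d_in_small <- 5
d_out_small <- 4

# The update we want to recover: thetas2 - thetas, i.e. a matrix of ones
delta <- matrix(1, nrow = d_in_small, ncol = d_out_small)

# A column of ones times a row of ones reproduces it exactly
u <- matrix(1, nrow = d_in_small, ncol = 1)
v <- matrix(1, nrow = 1, ncol = d_out_small)
same_matrix <- isTRUE(all.equal(delta, u %*% v))

# Numerical rank of the all-ones matrix
delta_rank <- qr(delta)$rank

same_matrix
delta_rank
```

In LoRA terms, a pair of factors with \(r = 1\) can in principle represent this update exactly, for example with every entry of both factors equal to 1.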

Let’s define a new torch module that implements the LoRA logic.

lora_nn_linear <- nn_module(
  initialize = function(linear, r = 16, alpha = 1) {
    self$linear <- linear

    # parameters from the original linear module are frozen, so they are not
    # tracked by autograd. They are treated as constants.
    purrr::walk(self$linear$parameters, \(x) x$requires_grad_(FALSE))

    # the low-rank parameters that will be trained; B starts at zero, so the
    # initial update is zero
    self$A <- nn_parameter(torch_randn(linear$in_features, r))
    self$B <- nn_parameter(torch_zeros(r, linear$out_features))

    # the scaling constant
    self$scaling <- alpha / r
  },
  forward = function(x) {
    # the modified forward pass: the output of the base model plus x
    # multiplied by the scaled low-rank update A %*% B
    self$linear(x) + torch_matmul(x, torch_matmul(self$A, self$B) * self$scaling)
  }
)

Now let’s initialize the LoRA model. We will use \(r = 1\), which means A and B are just vectors. The base model has 1001 × 1000 trainable parameters. The LoRA model we are going to fine-tune has just 1001 + 1000 of them, which is about 1/500 of the base model’s parameters.

lora <- lora_nn_linear(model, r = 1)

Now let’s train the LoRA model on the new distribution:

train(lora, X2, y2)
#> ( 10 ) Loss: 798.6073
#> ( 20 ) Loss: 485.8804
#> ( 30 ) Loss: 257.3518
#> ( 40 ) Loss: 118.4895
#> ( 50 ) Loss: 46.34769
#> ( 60 ) Loss: 14.46207
#> ( 70 ) Loss: 3.185689
#> ( 80 ) Loss: 0.4264134
#> ( 90 ) Loss: 0.02732975
#> ( 100 ) Loss: 0.001300132

If we look at \(\Delta\theta\), we see a matrix full of ones, which is exactly the transformation we applied to the weights:

delta_theta <- torch_matmul(lora$A, lora$B) * lora$scaling
delta_theta[1:5, 1:5]
#> torch_tensor
#>  1.0002  1.0001  1.0001  1.0001  1.0001
#>  1.0011  1.0010  1.0011  1.0011  1.0011
#>  0.9999  0.9999  0.9999  0.9999  0.9999
#>  1.0015  1.0014  1.0014  1.0014  1.0014
#>  1.0008  1.0008  1.0008  1.0008  1.0008
#> [ CPUFloatType{5,5} ][ grad_fn = <SliceBackward0> ]

To avoid the additional inference latency of computing the deltas separately, we can modify the original model by adding the estimated deltas to its parameters. We use the add_ method to modify the weight in place.
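
One detail worth spelling out is why the delta is transposed before being added. A torch linear layer stores its weight with shape (out_features, in_features), while the delta computed from A and B has shape (in_features, out_features). The base R sketch below imitates that layout with plain matrices and checks that merging the transposed delta gives the same output as the separate LoRA branch. All names, sizes and random values here are hypothetical and only for illustration.

```r
set.seed(1)

# Hypothetical small sizes, for illustration only
n_in <- 4; n_out <- 3; r <- 1; scaling <- 1

# Weight stored as (out, in), imitating the layout of a torch linear layer
W_stored <- matrix(rnorm(n_out * n_in), nrow = n_out, ncol = n_in)

# Low-rank factors laid out as in the LoRA module: A is (in, r), B is (r, out)
A <- matrix(rnorm(n_in * r), nrow = n_in, ncol = r)
B <- matrix(rnorm(r * n_out), nrow = r, ncol = n_out)

# A small batch of inputs, one observation per row
x <- matrix(rnorm(2 * n_in), nrow = 2, ncol = n_in)

# The scaled update has shape (in, out)
delta <- (A %*% B) * scaling

# Forward pass with the separate LoRA branch
adapter_out <- x %*% t(W_stored) + x %*% delta

# Merge into the stored weight: the transpose matches the (out, in) layout
W_merged <- W_stored + t(delta)
merged_out <- x %*% t(W_merged)

outputs_match <- isTRUE(all.equal(adapter_out, merged_out))
outputs_match
```

Once merged, the extra branch is no longer needed at inference time, because a single matrix multiplication produces the adapted output.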

with_no_grad({
  model$weight$add_(delta_theta$t())
})

Now, applying the base model to data from the new distribution yields good performance, so we can say the model is adapted to the new task.

nnf_mse_loss(model(X2), y2)
#> torch_tensor
#> 0.00130013
#> [ CPUFloatType{} ]

Conclusion

Now that we have seen how LoRA works in this simple example, we can think about how it could work on large pre-trained models.

It turns out that Transformer models are mostly a clever arrangement of these matrix multiplications, and applying LoRA only to these layers is enough to reduce the fine-tuning cost by a large amount while still getting good performance. You can see the experiments in the LoRA paper.

Of course, the idea of LoRA is simple enough that it is not limited to linear layers. You can apply it to convolutions, embedding layers and, in principle, other layers as well.

Image from the LoRA paper by Hu et al.