Maintain strategic interoperability and flexibility

In the rapidly evolving generative AI environment, choosing the right components for your AI solution is critical. With the variety of large language models (LLMs), embedding models, and vector databases available, it is important to weigh your options carefully, because your decisions have important downstream impacts.

A particular embedding model may be too slow for your application. Your system prompt approach may generate too many tokens, which leads to higher costs. There are many similar risks, but one that is often overlooked is obsolescence.

As more features and tools come online, organizations must prioritize interoperability to take advantage of the latest advancements in the field and retire outdated tools. To remain competitive in this environment, designing solutions that can seamlessly integrate and evaluate new components is essential.

Confidence in the reliability and safety of LLMs in production is another important concern. Implementing measures to mitigate risks such as toxicity, security vulnerabilities, and inappropriate responses is essential to ensure user trust and compliance with regulatory requirements.

In addition to performance considerations, factors such as licensing, control, and security also influence the choice between open source and commercial models:

- Commercial models offer convenience and ease of use, especially for rapid deployment and integration.

- Open source models offer more control and customization options, making them ideal for sensitive data and specialized use cases.

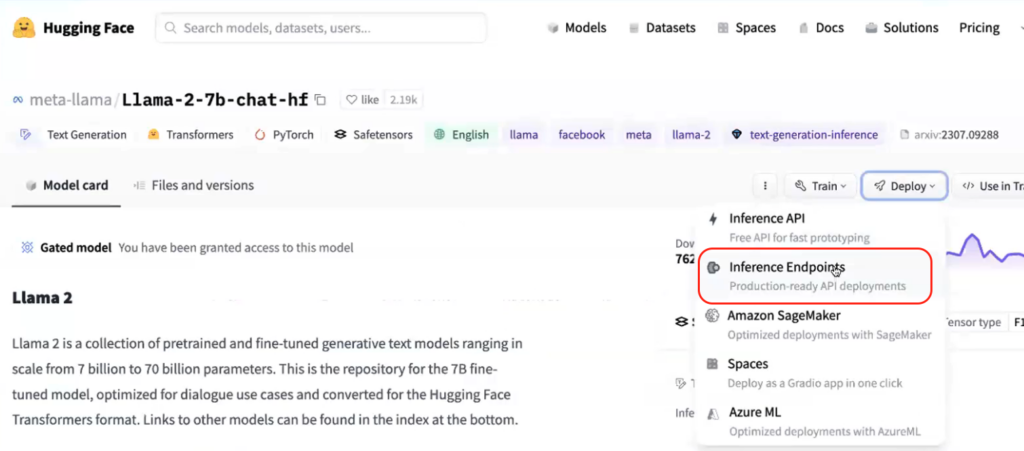

With all this in mind, it’s clear why platforms like HuggingFace are so popular among AI builders. They provide access to cutting-edge models, components, datasets, and tools for AI experimentation.

A good example is the robust ecosystem of open source embedding models that have become popular for their flexibility and performance across a wide range of languages and tasks. Leaderboards such as the Massive Text Embedding Benchmark (MTEB) Leaderboard provide valuable insight into the performance of different embedding models, helping users identify the option that best suits their needs.

The same can be said for the proliferation of various open source LLMs such as Smaug and DeepSeek, and open source vector databases such as Weaviate and Qdrant.

With so many choices, one of the most effective ways to select the right tools and LLMs for your organization is to work with these models in a live environment and experience their capabilities firsthand, so you can confirm that they align with your goals before you commit to deploying them. Combining DataRobot with HuggingFace’s vast library of generative AI components allows you to do just that.

Let’s look at how you can easily set up endpoints for your models, explore and compare LLMs, and deploy securely while enabling powerful model monitoring and maintenance capabilities in production.

Simplifying LLM Experiments with DataRobot and HuggingFace

This is a brief overview of the important steps in the process. You can follow the entire process step by step in this on-demand webinar hosted by DataRobot and HuggingFace.

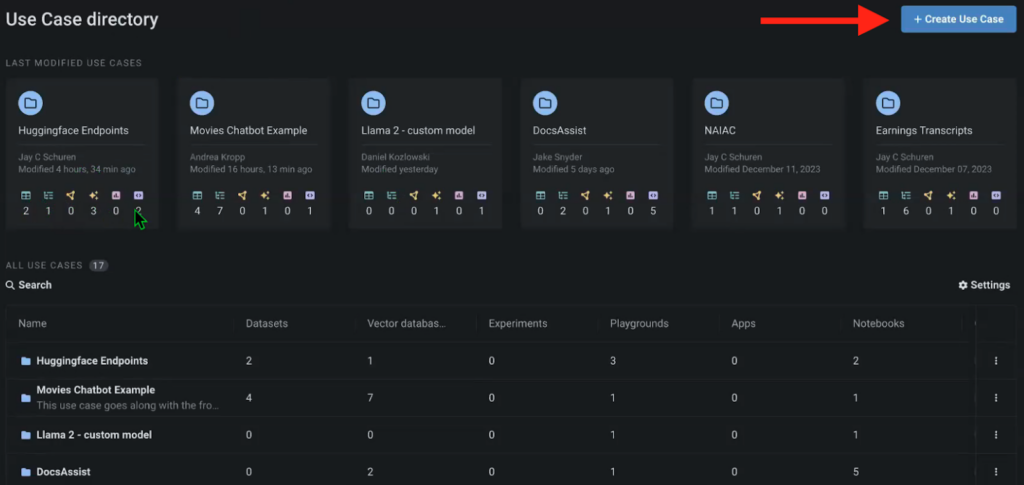

To get started, you need to create the required model endpoints in HuggingFace and set up a new use case in DataRobot Workbench. Think of a use case as an environment containing all kinds of artifacts related to a specific project, from datasets and vector databases to LLM Playgrounds for model comparison and related notebooks.

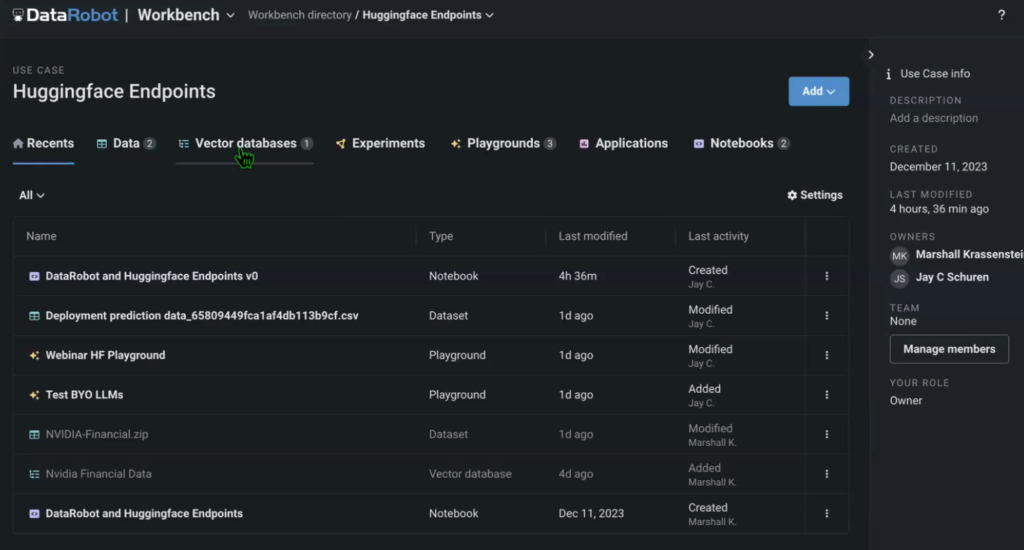

In this instance, we created a use case to experiment with different model endpoints from HuggingFace.

The use case also includes the data (in this example, we used NVIDIA earnings reporting records as the source), the vector database generated using an embedding model called from HuggingFace, the LLM Playground in which we compare the models, and the source notebook that runs the entire solution.

You can build the use case in a DataRobot notebook by using the default code snippets available in DataRobot and HuggingFace, or by importing and modifying existing Jupyter notebooks.

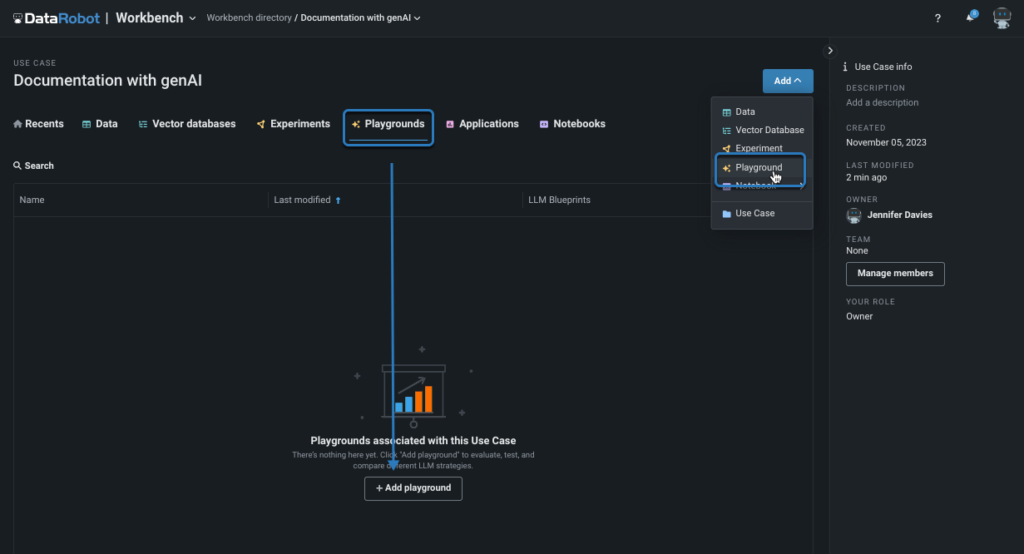

Now that you have all your source documents, your vector database, and all your model endpoints, it’s time to build a pipeline to compare them in LLM Playground.

Traditionally, you could perform the comparison directly in the notebook, with the output displayed in the notebook. However, if you want to compare different models and their parameters, this experience is suboptimal.

LLM Playground is a UI that allows you to run multiple models in parallel, query them, and receive output simultaneously, while also allowing you to adjust model settings and further compare results. Another good example of experimentation is testing different embedding models, as this can change the performance of your solution based on the language used for prompts and output.
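To make the idea of a side-by-side comparison concrete, here is a minimal sketch of what sending one prompt to several models in parallel can look like in plain Python. It is not how the LLM Playground is implemented; `model_a` and `model_b` are hypothetical stand-ins for real model clients, and `compare_models` is an illustrative helper name.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, Dict


# Hypothetical stand-ins for real model clients: each takes a prompt, returns text.
def model_a(prompt: str) -> str:
    return f"[model_a] {prompt.upper()}"


def model_b(prompt: str) -> str:
    return f"[model_b] {prompt[::-1]}"


def compare_models(models: Dict[str, Callable[[str], str]], prompt: str) -> Dict[str, str]:
    """Send the same prompt to every model concurrently and collect outputs by name."""
    with ThreadPoolExecutor(max_workers=max(1, len(models))) as pool:
        futures = {name: pool.submit(fn, prompt) for name, fn in models.items()}
        # result() blocks until each model has answered.
        return {name: future.result() for name, future in futures.items()}


if __name__ == "__main__":
    results = compare_models({"model_a": model_a, "model_b": model_b}, "What was Q4 revenue?")
    for name, output in results.items():
        print(f"{name}: {output}")
```

Even in this toy form, a manual comparison already needs bookkeeping for names, concurrency, and output collection, which is the overhead a dedicated comparison UI takes off your hands.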

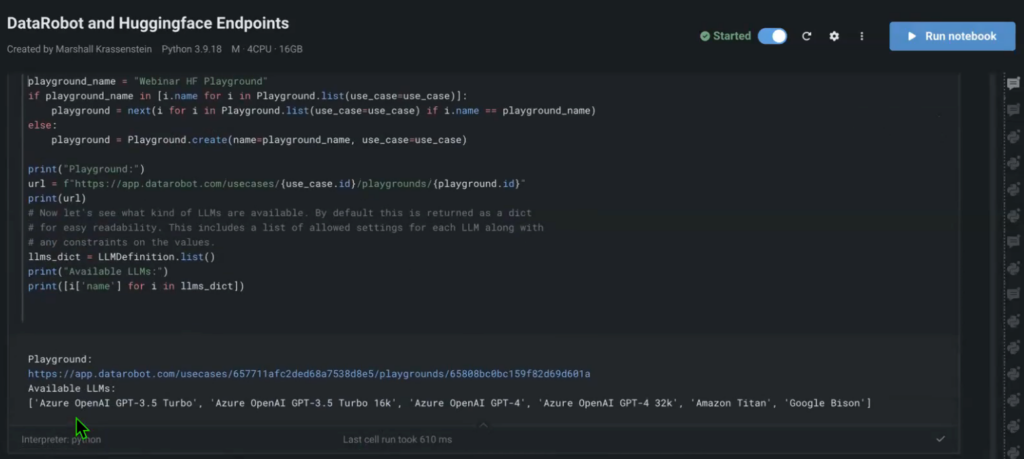

This process abstracts away many of the steps that you would otherwise have to perform manually in a notebook to run complex model comparisons. The Playground also comes with several models out of the box (OpenAI GPT-4, Titan, Bison, etc.), so you can compare your custom models and their performance against these benchmark models.

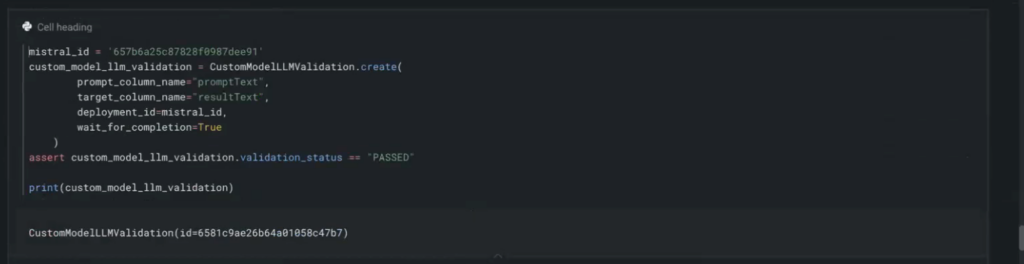

You can add each HuggingFace endpoint to your notebook using a few lines of code.
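The exact snippet depends on your endpoint and on the DataRobot notebook template you start from. As a rough, stand-alone sketch, calling a hosted text-generation endpoint over HTTP might look like the following. The URL and token are placeholders, and the payload shape (`inputs` plus a `parameters` object) is an assumption that holds for typical text-generation endpoints but may differ for yours.

```python
import json
import urllib.request

# Placeholder values (assumptions): substitute your own endpoint URL and access token.
ENDPOINT_URL = "https://example.invalid/my-endpoint"
API_TOKEN = "hf_xxx"


def build_request(prompt, temperature=0.2, max_new_tokens=256,
                  url=ENDPOINT_URL, token=API_TOKEN):
    """Build an authenticated POST request carrying the prompt and generation settings."""
    payload = {
        "inputs": prompt,
        "parameters": {"temperature": temperature, "max_new_tokens": max_new_tokens},
    }
    return urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Authorization": f"Bearer {token}", "Content-Type": "application/json"},
        method="POST",
    )


def query_endpoint(prompt, **kwargs):
    """Send the request and return the decoded JSON response (requires network access)."""
    request = build_request(prompt, **kwargs)
    with urllib.request.urlopen(request, timeout=60) as response:
        return json.loads(response.read().decode("utf-8"))
```

Keeping request construction separate from the network call makes it easy to inspect or log the payload before anything is sent.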

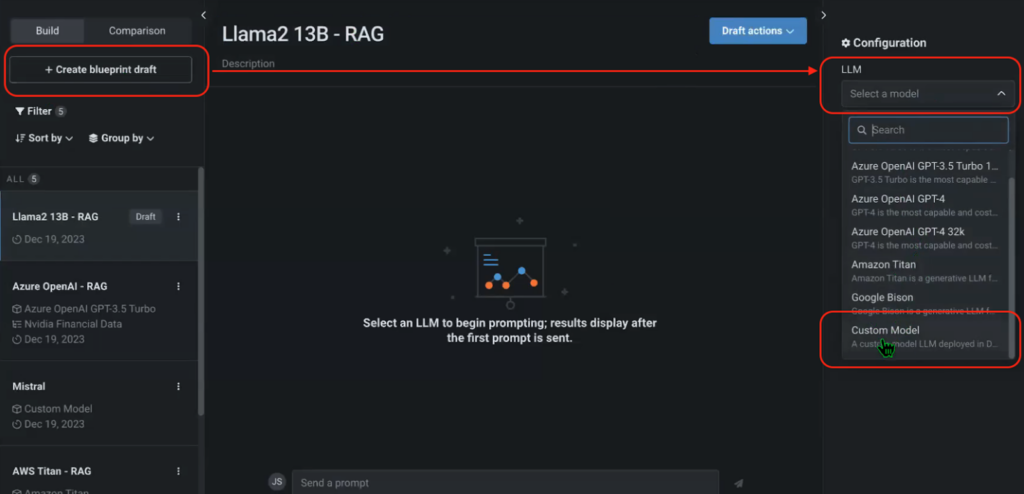

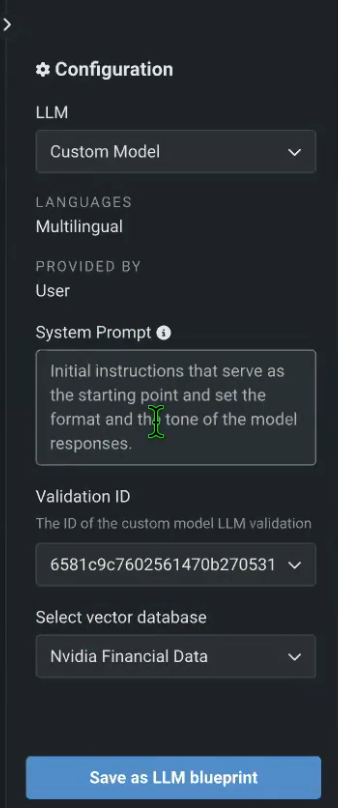

After your Playground is ready and you’ve added the HuggingFace endpoints, you can go back to the Playground, create new blueprints, and add a custom HuggingFace model to each one. You can also configure the system prompt and select your preferred vector database, in this case NVIDIA Financial Data.

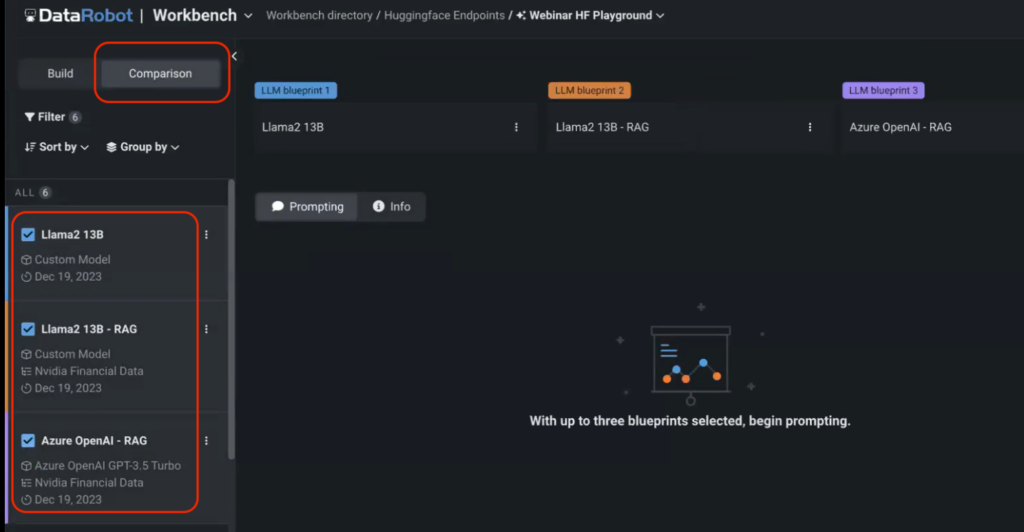

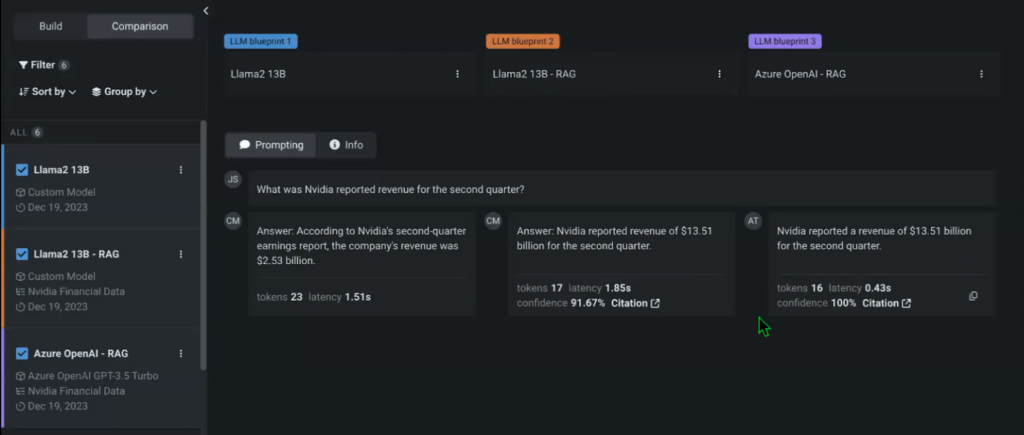

Figure 6, 7. Adding and configuring the HuggingFace endpoint in LLM Playground.

Once you’ve done this for all custom models deployed on HuggingFace, you can start comparing them.

Go to the Playground’s Compare menu and select the models you want to compare. In this case, we compare two custom models available through HuggingFace endpoints with the default OpenAI GPT-3.5 Turbo model.

Note that we did not specify a vector database for one of the models, so that we could compare its performance against its RAG counterpart. You can then start prompting the models and compare their output in real time.
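The difference between a RAG and a non-RAG configuration comes down to whether retrieved context is placed in front of the question. The sketch below illustrates that idea only; `build_prompt` and its prompt wording are hypothetical and are not the template the Playground uses.

```python
from typing import List, Optional


def build_prompt(question: str, context_chunks: Optional[List[str]] = None) -> str:
    """Return a plain prompt, or one grounded in retrieved chunks when they are provided."""
    if not context_chunks:
        # Non-RAG case: the model can rely only on what it learned in training.
        return f"Question: {question}\nAnswer:"
    # RAG case: retrieved chunks from the vector database are prepended as context.
    context = "\n".join(f"- {chunk}" for chunk in context_chunks)
    return (
        "Answer using only the context below.\n"
        f"Context:\n{context}\n"
        f"Question: {question}\nAnswer:"
    )
```

Sending both variants of the same question to the same model is a quick way to see how much of the answer quality comes from the retrieved documents rather than from the model itself.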

There are numerous settings and iterations you can add to your experiments in the Playground, including temperature, the maximum number of completion tokens, and more. You can immediately see that the non-RAG model, which has no access to the NVIDIA Financial Data vector database, gives a different response that is also incorrect.

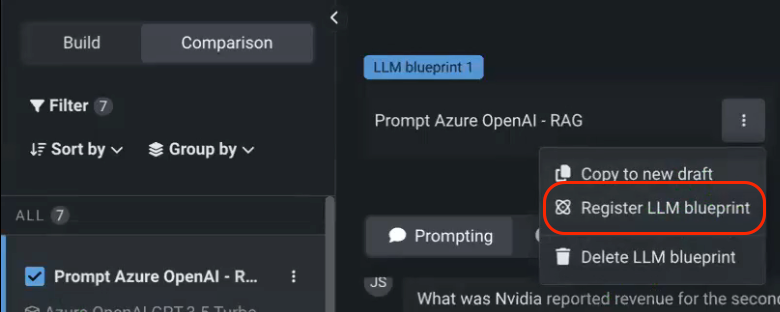

After you’ve completed your experiment, you can register your selected model in the AI Console, the hub for all your model deployments.

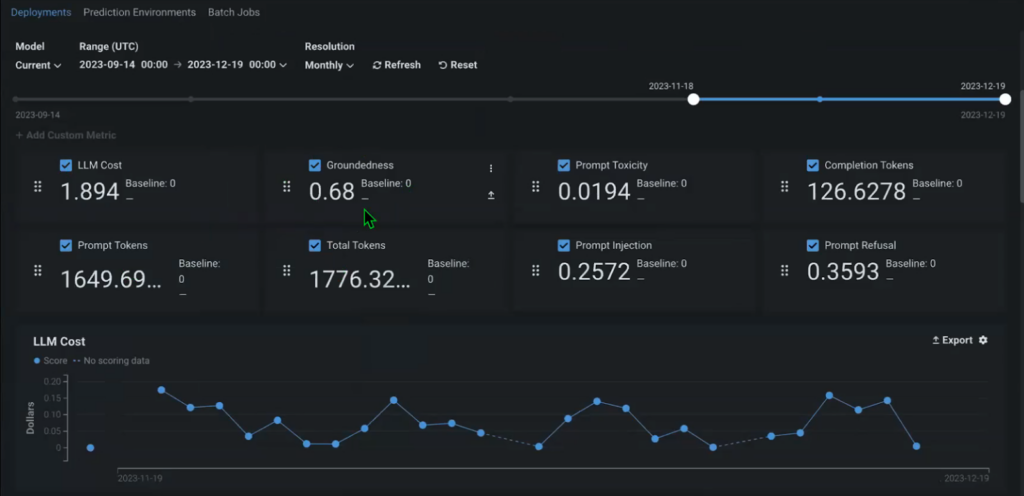

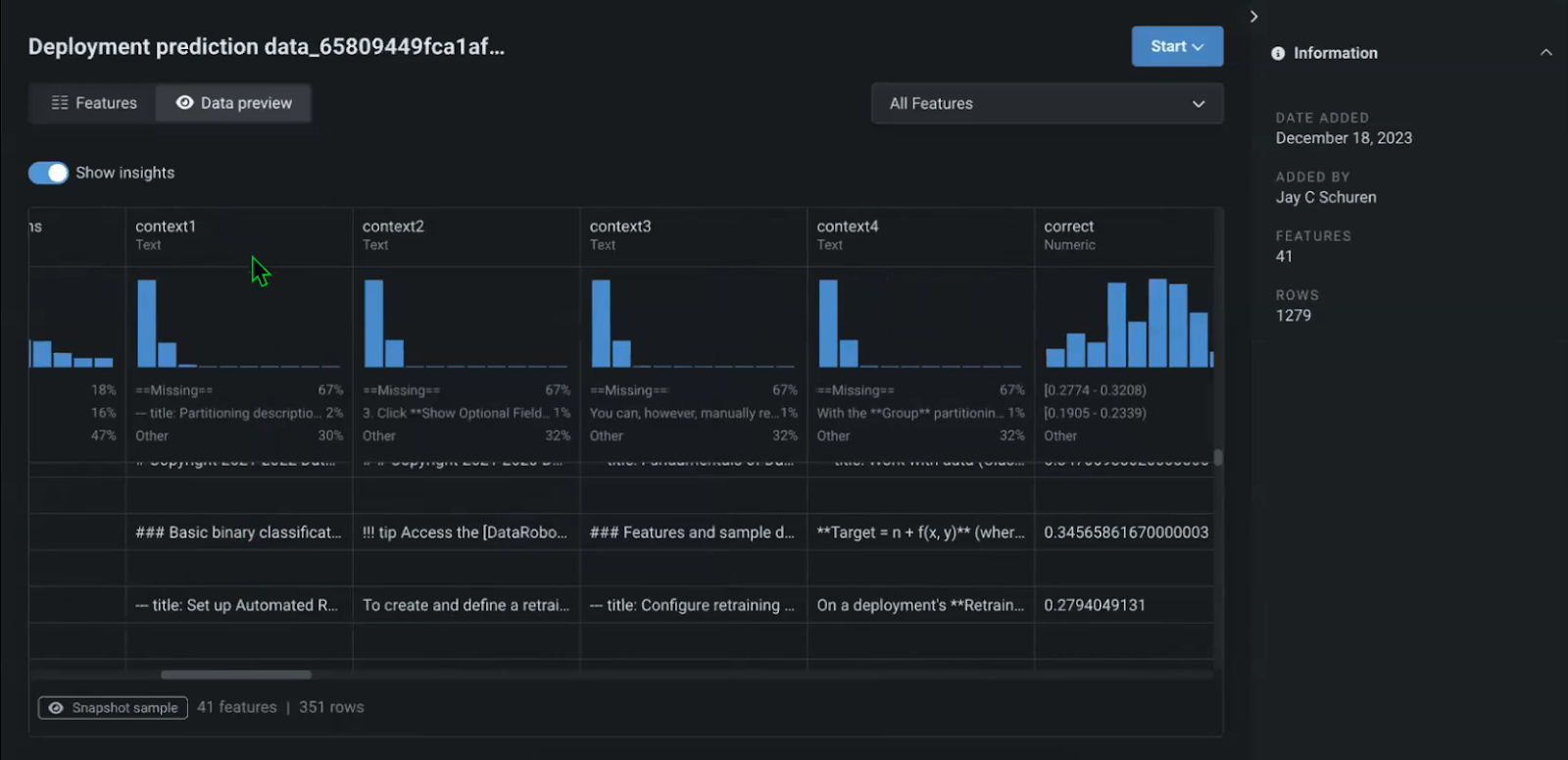

A model’s lineage begins as soon as it is registered, tracking when it was created, for what purpose, and by whom. You can immediately start tracking basic metrics within the console to monitor performance, and even add custom metrics relevant to your specific use case.

For example, groundedness can be an important long-term metric that allows you to understand how well the context you provide (your source documents) fits the model, that is, what percentage of the source documents is used to generate the answer. This helps you understand whether you are using accurate and relevant information in your solution and update it if necessary.
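As an illustration of the kind of custom metric described above, the following sketch computes a naive source-usage ratio: the fraction of retrieved chunks whose words mostly reappear in the answer. This is a simple token-overlap proxy chosen for clarity, not the metric DataRobot computes; the function name and the `min_overlap` threshold are assumptions.

```python
import re
from typing import List, Set


def _tokens(text: str) -> Set[str]:
    """Lowercase the text and split it into a set of alphanumeric tokens."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def chunk_usage_ratio(answer: str, chunks: List[str], min_overlap: float = 0.5) -> float:
    """Fraction of chunks whose token overlap with the answer is at least min_overlap."""
    if not chunks:
        return 0.0
    answer_tokens = _tokens(answer)
    used = 0
    for chunk in chunks:
        chunk_tokens = _tokens(chunk)
        # Count a chunk as "used" when enough of its tokens appear in the answer.
        if chunk_tokens and len(chunk_tokens & answer_tokens) / len(chunk_tokens) >= min_overlap:
            used += 1
    return used / len(chunks)
```

A consistently low ratio over time would suggest that the retrieved documents are not contributing to the answers, which is a signal to revisit the source data or the retrieval setup.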

The console also tracks the entire pipeline for each question and answer, including the context that is retrieved and passed to the model as well as the model’s output. It also records the source document that each specific answer came from.

How to Choose the Right LLM for Your Use Case

Overall, the process of testing LLMs and figuring out which LLM is right for your use case is a multifaceted endeavor that requires careful consideration of a variety of factors. You can apply different settings to each LLM to drastically change its performance.

This highlights the importance of experimentation and continuous iteration to ensure robustness and high efficiency of the deployed solution. Only by comprehensively testing models against real-world scenarios can users identify potential limitations and areas for improvement before the solution is put into production.

Maximizing the efficiency and reliability of generative AI solutions and providing accurate and relevant responses to user queries requires a robust framework that combines real-time interaction, backend configuration, and thorough monitoring.

By combining HuggingFace’s diverse library of generative AI components with DataRobot’s integrated approach to model experimentation and deployment, organizations can rapidly iterate and deliver real-world-ready, production-level generative AI solutions.

About the author

Nathaniel Daly is a senior product manager at DataRobot responsible for AutoML and time series products. He focuses on bringing advances in data science to users so they can leverage this value to solve real-world business problems. He earned a degree in Mathematics from the University of California, Berkeley.
