In the rapidly evolving generative AI (GenAI) landscape, data scientists and AI builders are constantly looking for powerful tools to create innovative applications using large language models (LLMs). DataRobot is introducing an advanced suite of LLM assessments, tests, and metrics to Playground, providing unique features that set it apart from other platforms.

These metrics, including fidelity, accuracy, citations, Rouge-1, cost, and latency, provide a comprehensive and standardized approach to verifying the quality and performance of GenAI applications. By leveraging these metrics, customers and AI builders can develop reliable, efficient, and high-value GenAI solutions with confidence, accelerate time to market, and gain competitive advantage. In this blog post, we’ll take a closer look at these metrics and how they can help you leverage the full potential of your LLM within the DataRobot platform.

Explore comprehensive evaluation indicators

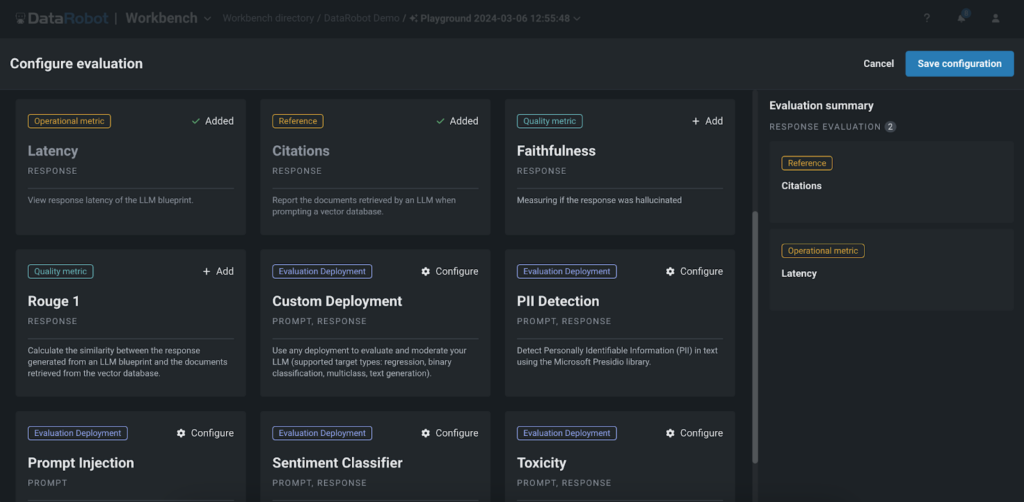

DataRobot’s Playground provides a comprehensive set of evaluation metrics that allow users to benchmark, compare performance, and rank Retrieval-Augmented Generation (RAG) experiments. These metrics include:

- faithfulness: This indicator assesses how accurately the responses generated by LLM reflect the data retrieved from the vector database, ensuring the reliability of the information.

- conclusion: The accuracy metric evaluates the accuracy of the LLM output by comparing the generated responses to the actual ones. This is especially useful in applications where accuracy is critical, such as medical, financial, or legal domains, so customers can trust the information provided by GenAI applications.

- Quotation: This metric tracks the documents retrieved by LLM when requesting a vector database, providing insight into the sources used to generate the response. This helps users ensure that their applications utilize the most appropriate sources and improves the relevance and credibility of the content produced. Playground’s guard model can help you determine the quality and relevance of the citations you use in your LLM.

- Rouge-1: The Rouge-1 metric calculates the overlap of unigrams (each word) between generated responses and documents retrieved from a vector database, allowing users to evaluate the relevance of generated content.

- Cost and Latency: We also provide metrics to track the costs and latency associated with running LLM, allowing users to optimize their experiments for efficiency and cost-effectiveness. These metrics help organizations find the right balance between performance and budget constraints, ensuring the feasibility of deploying GenAI applications at scale.

- Guard Model: Our platform allows users to evaluate LLM responses by applying protected models from the DataRobot Registry or custom models. Models such as toxicity and PII detectors can be added to the playground to evaluate each LLM output. This makes it easy to test guard models against LLM responses before deploying them to production.

efficient experiments

DataRobot’s Playground allows customers and AI builders to freely experiment with different LLMs, chunking strategies, embedding methods, and prompting methods. Evaluation metrics play an important role in helping users efficiently navigate this experimentation process. DataRobot provides a standardized set of evaluation metrics, allowing users to easily compare the performance of different LLM configurations and experiments. This allows customers and AI builders to make data-driven decisions when choosing the best approach for their specific use case, saving time and resources in the process.

For example, by experimenting with different chunking strategies or embedding methods, users have been able to significantly improve the accuracy and relevance of GenAI applications in real-world scenarios. This level of experimentation is critical to developing high-performance GenAI solutions tailored to specific industry requirements.

Optimization and user feedback

Playground’s evaluation metrics serve as a useful tool for evaluating the performance of GenAI applications. By analyzing metrics like Rouge-1 or Citations, customers and AI builders can identify areas where their models can be improved, such as strengthening the relevance of generated responses or ensuring that the application utilizes the most appropriate sources in the vector database. . These metrics provide a quantitative approach to assessing the quality of the generated responses.

In addition to rating metrics, DataRobot’s Playground allows users to provide direct feedback on responses generated through like/dislike ratings. This user feedback is the primary way to create fine-tuned datasets. Users can review the responses generated by LLM and vote on their quality and relevance. Upvoted responses are then used to generate datasets to fine-tune GenAI applications. This helps us learn your preferences and generate more accurate and relevant responses in the future. This means you can collect as much feedback as you need to create a comprehensive, fine-tuned dataset that reflects real user preferences and needs.

By combining evaluation metrics and user feedback, customers and AI builders can make data-driven decisions to optimize their GenAI applications. You can use metrics to identify high-performing responses and include them in your fine-tuning dataset to ensure your model learns from the best examples. This iterative process of evaluation, feedback, and fine-tuning allows organizations to continually improve their GenAI applications and deliver high-quality, user-centric experiences.

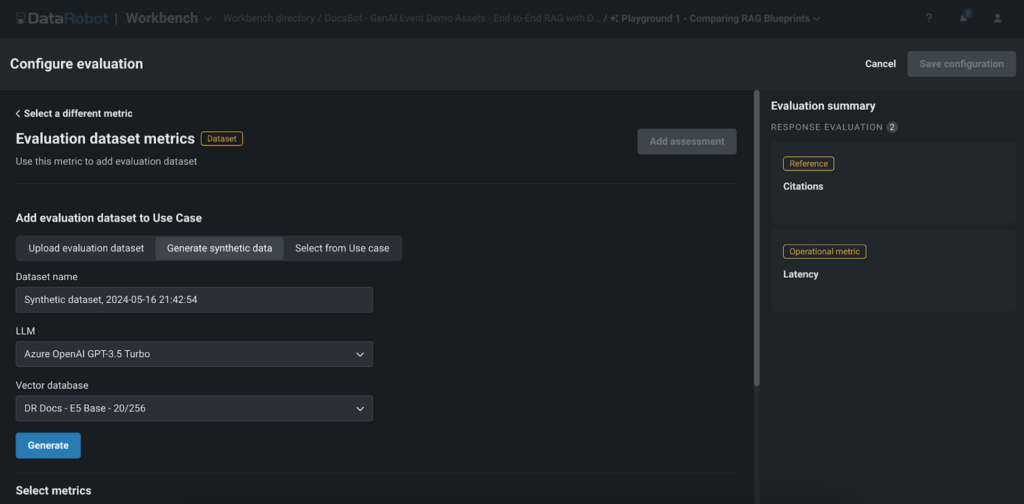

Generate synthetic data for rapid evaluation

One of the great features of DataRobot’s Playground is the generation of synthetic data for quick-answer assessments. This feature allows users to quickly and easily generate question and answer pairs based on the user’s vector database, enabling thorough evaluation of the performance of RAG experiments without the need to manually generate data.

Generating synthetic data offers several key benefits:

- Save time: Manually generating large data sets can be time-consuming. DataRobot’s synthetic data generation automates this process, saving valuable time and resources and enabling customers and AI builders to rapidly prototype and test GenAI applications.

- Scalability: The ability to generate thousands of question and answer pairs allows users to thoroughly test RAG experiments and ensure robustness across a wide range of scenarios. This comprehensive testing approach helps customers and AI builders deliver high-quality applications that meet the needs and expectations of end users.

- Quality assessment: By comparing generated responses to synthetic data, users can easily evaluate the quality and accuracy of GenAI applications. This accelerates the time to value of GenAI applications, allowing organizations to bring innovative solutions to market more quickly and gain a competitive advantage in their industries.

While synthetic data provides a fast and efficient way to evaluate GenAI applications, it is important to consider that it cannot always capture the full complexity and nuance of real-world data. Therefore, it is important to use synthetic data along with real user feedback and other evaluation methods to ensure the robustness and efficiency of GenAI applications.

conclusion

DataRobot’s advanced LLM evaluation, testing, and evaluation metrics in Playground provide customers and AI builders with a powerful set of tools to create high-quality, reliable, and efficient GenAI applications. By providing comprehensive evaluation metrics, efficient experimentation and optimization capabilities, integration of user feedback, and synthetic data generation for rapid evaluation, DataRobot helps users leverage the full potential of LLM and produce meaningful results.

Increased confidence in model performance, faster time to value, and the ability to fine-tune applications allow customers and AI builders to focus on delivering innovative solutions that solve real-world problems and create value for end users. With advanced evaluation metrics and unique features, DataRobot’s Playground is a game changer in the GenAI landscape, helping organizations push the boundaries of what is possible with large-scale language models.

Don’t miss the opportunity to optimize your project with the most advanced LLM testing and evaluation platform. Visit DataRobot’s Playground today and begin your journey to building great GenAI applications that will truly stand out in the competitive AI landscape.

About the author

Nathaniel Daly is a senior product manager at DataRobot responsible for AutoML and time series products. He focuses on bringing advances in data science to users so they can leverage this value to solve real-world business problems. He earned a degree in Mathematics from the University of California, Berkeley.

Meet Nathaniel Daley