With new enhancements to our Azure OpenAI Service Provisioned offering, we are taking great strides in making AI accessible and enterprise-ready.

In today’s rapidly evolving digital environment, businesses need more than powerful AI models. They need AI solutions that are adaptable, reliable, and scalable. With the upcoming availability of Data Zones and new enhancements to the Provisioned offering in Azure OpenAI Service, we are taking great strides in making AI broadly available and enterprise-ready. These capabilities represent a fundamental shift in how organizations deploy, manage, and optimize generative AI models.

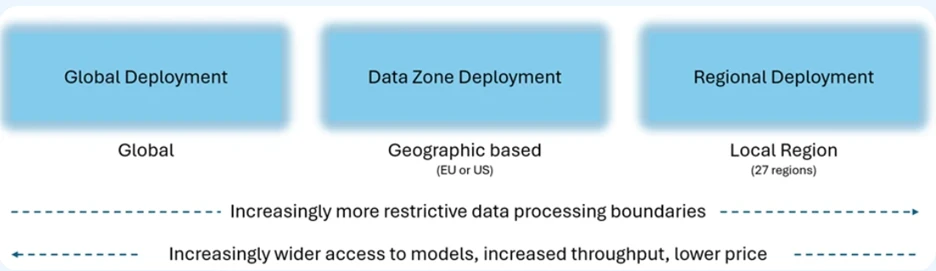

With the launch of Azure OpenAI Service Data Zones in the European Union and the United States, enterprises can now more easily scale their AI workloads while complying with regional data residency requirements. Historically, differences in regional model availability often required customers to manage multiple resources, slowing development and complicating operations. Azure OpenAI Service Data Zones remove this friction by providing flexible, multi-region data processing while ensuring data is processed and stored within the selected data boundary.

This is a compliance win that also lets enterprises scale their AI operations seamlessly across geographies, optimizing both performance and reliability without having to manage traffic across disparate systems.

Leya, a technology startup building a generative AI platform for legal professionals, has been exploring the Data Zones deployment option.

“The Azure OpenAI Service Data Zones deployment option provides a cost-effective way for Leya to securely scale AI applications to thousands of lawyers, ensuring compliance and peak performance. It also gives us quick access to the latest Azure OpenAI innovations, helping us achieve better customer quality and control.” - Sigge Labor, CTO, Leya

Data Zones will be available for both Standard (PayGo) and Provisioned offerings starting this week, on November 1, 2024.

Industry-leading performance

Enterprises rely on predictability, especially when deploying mission-critical applications. This is why we are introducing a 99% latency service level agreement (SLA) for token generation. This latency SLA ensures that tokens are generated at a faster and more consistent rate, especially at high volumes.

The Provisioned offering provides predictable performance for your applications. In e-commerce, healthcare, and financial services, the ability to rely on low-latency, highly reliable AI infrastructure leads directly to better customer experiences and more efficient operations.

Lower startup costs

To make it easier to test, scale, and manage deployments, we are reducing hourly prices for Provisioned Global and Provisioned Data Zone deployments starting November 1, 2024. These cost savings allow customers to take advantage of the new features without incurring higher costs. The Provisioned offering continues to offer discounts for monthly and annual commitments.

| Deployment option | Hourly price per PTU | 1-month reservation per PTU | 1-year reservation per PTU |
|---|---|---|---|
| Provisioned Global | Current: $2.00 per hour. From November 1, 2024: $1.00 per hour | $260 per month | $221 per month |
| Provisioned Data Zone (new) | From November 1, 2024: $1.10 per hour | $260 per month | $221 per month |
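To get a feel for how the hourly and reserved prices in the table above compare, the arithmetic can be sketched in a few lines of Python. This is only an illustration: the function names are hypothetical, the 730-hour month is an assumed average (8,760 hours / 12), and actual billing rules may differ from this simple model.

```python
# Rough comparison of hourly vs. reserved PTU pricing, using the prices
# listed in the table above. The 730-hour month is an assumption.
HOURS_PER_MONTH = 730


def monthly_cost_hourly(hourly_rate: float, ptus: int,
                        hours: float = HOURS_PER_MONTH) -> float:
    """Cost of running `ptus` PTUs on hourly billing for `hours` hours."""
    return hourly_rate * ptus * hours


def break_even_hours(hourly_rate: float, monthly_reservation: float) -> float:
    """Hours per month beyond which a 1-month reservation costs less
    than paying the hourly rate for the same PTU."""
    return monthly_reservation / hourly_rate


# Provisioned Global at the November 1, 2024 hourly price, 15 PTUs, full month.
print(monthly_cost_hourly(1.00, 15))   # hourly billing for 730 hours
print(260 * 15)                        # 1-month reservation for 15 PTUs
print(break_even_hours(1.00, 260))     # hours at which the two are equal
```

Under these assumptions, a deployment that runs around the clock is considerably cheaper on a reservation, while a short-lived test deployment can come out ahead on hourly billing.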

We’re also reducing the minimum deployment entry point for Provisioned Global deployments by 70% and reducing increments by up to 90%, lowering the barrier for enterprises to start using the Provisioned offering earlier in the development lifecycle.

Minimum deployment quantity and increment for provisioned products

| Model | Global | Data Zone (new) | Regional |
|---|---|---|---|
| GPT-4o | Minimum: …, increment: … | Minimum: 15, increment: 5 | Minimum: 50, increment: 50 |
| GPT-4o-mini | Minimum: …, increment: … | Minimum: 15, increment: 5 | Minimum: 25, increment: 25 |
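As a rough illustration of how a minimum and an increment shape deployment sizing, the sketch below rounds a requested PTU count up to the nearest deployable size. It assumes that valid sizes are the minimum plus a whole number of increments, which is an interpretation of the table above and not something the text states explicitly. The function name is hypothetical.

```python
def valid_ptu_size(requested: int, minimum: int, increment: int) -> int:
    """Round a requested PTU count up to the nearest deployable size.

    Assumes deployable sizes are minimum, minimum + increment,
    minimum + 2 * increment, and so on.
    """
    if requested <= minimum:
        return minimum
    # Ceiling division using integers only, to avoid float rounding.
    steps = -(-(requested - minimum) // increment)
    return minimum + steps * increment


# GPT-4o, Data Zone deployment (minimum 15, increment 5)
print(valid_ptu_size(32, minimum=15, increment=5))    # 35

# GPT-4o, regional deployment (minimum 50, increment 50)
print(valid_ptu_size(32, minimum=50, increment=50))   # 50
print(valid_ptu_size(60, minimum=50, increment=50))   # 100
```

Under this assumption, the smaller minimum and increment let a workload that needs about 32 PTUs deploy 35 PTUs instead of 50, which is the kind of lower entry point the text describes.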

For developers and IT teams, this means faster deployment times and less friction when moving from Standard to Provisioned offerings. As your business grows, these simple transitions are essential for scaling your AI applications globally while remaining agile.

Efficiency through Caching: A Breakthrough Solution for High-Volume Applications

Another new feature is Prompt Caching, which provides cheaper and faster inference for repetitive API requests. For Standard deployments, cached tokens are discounted by 50%. For applications that frequently send the same system prompts and instructions, this improvement provides significant cost and performance benefits.

By caching prompts, organizations can maximize throughput while reducing costs by eliminating the need to repeatedly reprocess the same requests. This is especially useful in high-traffic environments where even small performance improvements can lead to real business benefits.
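The effect of the 50% cached-token discount can be approximated with a small calculation. The sketch below is a simplified model, not the service's billing logic: the function names are hypothetical, and the number of cached tokens is treated as a given input because the text does not describe how the service decides which tokens are served from the cache.

```python
def billable_input_tokens(input_tokens: int, cached_tokens: int,
                          cached_discount: float = 0.5) -> float:
    """Input tokens billed at full-price equivalents.

    Cached tokens count at (1 - cached_discount) of a normal token;
    0.5 matches the 50% Standard discount mentioned above.
    """
    if not 0 <= cached_tokens <= input_tokens:
        raise ValueError("cached_tokens must be between 0 and input_tokens")
    uncached = input_tokens - cached_tokens
    return uncached + cached_tokens * (1 - cached_discount)


def input_savings(input_tokens: int, cached_tokens: int,
                  cached_discount: float = 0.5) -> float:
    """Fraction of input-token cost saved relative to no caching."""
    billed = billable_input_tokens(input_tokens, cached_tokens, cached_discount)
    return 1 - billed / input_tokens


# A 10,000-token prompt in which an 8,000-token system prompt is cached.
print(billable_input_tokens(10_000, 8_000))  # 6000.0
print(input_savings(10_000, 8_000))
```

In this model the saving grows with the share of the prompt that repeats across requests, which is why long, stable system prompts benefit most.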

A new era of model flexibility and performance

One of the key benefits of the Provisioned offering is flexibility, with one simple set of hourly, monthly, and annual prices that applies to all available models. We’ve also heard feedback that it’s difficult to understand how many tokens per minute (TPM) you get for each model in a provisioned deployment. We now provide a simplified view of the number of input and output tokens per minute for each provisioned deployment, so customers no longer need to rely on detailed conversion tables or calculators.

We are keeping the flexibility our customers love in the Provisioned offering. With monthly and annual commitments, you can still change models and versions, such as GPT-4o and GPT-4o-mini, within the reservation period without losing any discounts. This agility allows companies to experiment, iterate, and evolve their AI deployments without incurring unnecessary costs or reconfiguring their infrastructure.

Enterprise readiness in action

Azure OpenAI’s ongoing innovation is not just theoretical. It is already producing results across a variety of industries. For example, companies like AT&T, H&R Block, Mercedes, and others are using Azure OpenAI Service not just as a tool, but as a transformative asset that reshapes how they operate and engage with their customers.

Beyond the Model: An Enterprise-Level Promise

It is clear that the future of AI is about more than just delivering the latest models. Powerful models like GPT-4o and GPT-4o-mini provide the foundation, but what makes Azure OpenAI Service enterprise-grade is the supporting infrastructure: the Provisioned offering, Data Zones deployment options, SLAs, caching, and simplified deployment flows.

Microsoft’s vision is to provide cutting-edge AI models as well as enterprise-grade tools and support that enable businesses to scale these models confidently, securely, and cost-effectively. From supporting low-latency, high-reliability deployments to providing flexible, simplified infrastructure, Azure OpenAI Service helps enterprises fully embrace the future of AI-driven innovation.

Get started now

As the AI landscape continues to evolve, the need for scalable, flexible, and reliable AI solutions becomes more critical to enterprise success. With the latest enhancements to Azure OpenAI Service, Microsoft is delivering on this promise by giving customers not only access to world-class AI models, but also the tools and infrastructure to operate them at scale.

Now is the time for enterprises to unlock the full potential of generative AI with Azure, moving beyond experiments to real enterprise-grade applications that drive measurable results. Whether you’re scaling virtual assistants, developing real-time voice applications, or transforming customer service with AI, Azure OpenAI Service provides the enterprise-grade platform you need to innovate and grow.