Speed, scale, and collaboration are essential for AI teams. However, limited structured data, constrained compute resources, and siloed workflows often get in the way.

Whether you’re a DataRobot customer or an AI practitioner looking for smarter ways to prepare and model large datasets, new tools like incremental learning, optical character recognition (OCR), and improved data preparation help you remove obstacles, build more accurate models, and spend less time doing it.

New features in the DataRobot Workbench environment include:

- Incremental learning: Model large amounts of data efficiently, with more transparency and control.

- Optical Character Recognition (OCR): Instantly transform unstructured scanned PDFs into usable data for predictive and generative AI use cases.

- Collaboration made easier: Work with your team in a unified space with shared access to data preparation, generative AI development, and predictive modeling tools.

Efficiently model large amounts of data through incremental learning

Building models on large datasets often leads to unexpected, sometimes prohibitive, compute costs and inefficiencies. Incremental learning removes these barriers, allowing you to model large data volumes with precision and control.

Instead of processing the entire data set at once, incremental learning runs successive iterations over the training data, using only as much data as needed to achieve optimal accuracy.

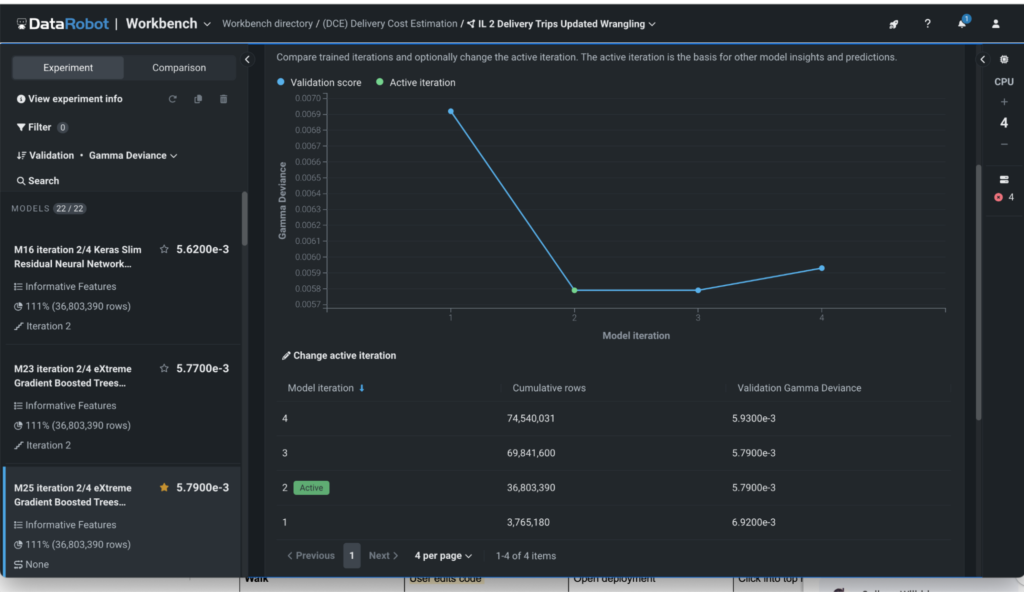

Each iteration is plotted on a chart (see Figure 1) that lets you track the number of rows processed and the accuracy achieved on the selected metric.
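The stop-on-diminishing-returns behavior described here can be illustrated with a small, generic Python sketch. This is not DataRobot's implementation or API: `train_incrementally`, `min_delta`, and `patience` are hypothetical names, and the toy "model" simply predicts the running mean of the targets it has seen, scored by negative mean absolute error on a small validation set (higher is better). Each iteration logs the rows processed and the score, mirroring what the chart tracks.

```python
from dataclasses import dataclass
from typing import Callable, Iterable, List, Sequence


@dataclass
class IterationLog:
    rows_processed: int
    score: float  # higher is better in this sketch


def train_incrementally(
    chunks: Iterable[Sequence[float]],
    update: Callable[[Sequence[float]], None],
    evaluate: Callable[[], float],
    min_delta: float = 0.001,
    patience: int = 1,
) -> List[IterationLog]:
    """Feed training data chunk by chunk and stop on diminishing returns.

    Stops once the validation score has failed to improve by at least
    `min_delta` for `patience` consecutive iterations.
    """
    history: List[IterationLog] = []
    best = float("-inf")
    stale = 0
    rows = 0
    for chunk in chunks:
        update(chunk)       # train on the next slice only
        rows += len(chunk)
        score = evaluate()  # score on held-out validation data
        history.append(IterationLog(rows, score))
        if score > best + min_delta:
            best, stale = score, 0
        else:
            stale += 1
            if stale >= patience:
                break       # diminishing returns: skip the remaining chunks
    return history


class RunningMean:
    """Toy model: predicts the mean of every target value seen so far."""

    def __init__(self) -> None:
        self.total, self.count = 0.0, 0

    def update(self, chunk: Sequence[float]) -> None:
        self.total += sum(chunk)
        self.count += len(chunk)

    def predict(self) -> float:
        return self.total / self.count


validation = [10.0, 10.0, 10.0]
chunks = [[0.0, 20.0, 8.0], [12.0, 10.0, 10.0], [10.0, 10.0, 10.0], [10.0, 10.0, 10.0]]
model = RunningMean()
history = train_incrementally(
    chunks,
    update=model.update,
    # Negative mean absolute error, so a higher score is better.
    evaluate=lambda: -sum(abs(v - model.predict()) for v in validation) / len(validation),
)
for log in history:
    print(f"rows={log.rows_processed} score={log.score:.3f}")
```

Here training stops after the third chunk because the score no longer improves, so the fourth chunk is never processed. Raising `patience` or lowering `min_delta` trades extra processing for more certainty that the plateau is real.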

Key benefits of incremental learning:

- Process only the data that improves results

Incremental learning automatically stops training when it detects diminishing returns, so only as much data is processed as is needed to reach optimal accuracy. In DataRobot, each iteration is tracked, so you can see exactly how much data produces the strongest results. You stay in control: you can customize the settings and run additional iterations to get it right.

- Train with the right amount of data

Incremental learning helps prevent overfitting by iterating over smaller samples, so the model learns general patterns rather than memorizing the training data.

- Automate complex workflows

Keep data provisioning fast and error-free. Advanced code-first users can go one step further and use stored weights to simplify retraining, processing only new data. This eliminates the need to rerun the entire dataset from scratch and reduces errors caused by manual setup.
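Retraining from stored weights can be sketched with a tiny linear model trained by stochastic gradient descent. This is an illustration of the general warm-start idea, not DataRobot's code-first API: the JSON state file, `sgd_pass`, `save_state`, and `load_state` are all hypothetical. The first run trains from scratch and saves the weights; the later run loads them and passes over the new rows only.

```python
import json
import tempfile
from pathlib import Path
from typing import List, Tuple

Row = Tuple[List[float], float]  # (features, target)


def sgd_pass(weights: List[float], bias: float, rows: List[Row], lr: float = 0.05) -> Tuple[List[float], float]:
    """One stochastic-gradient pass of linear regression (squared error) over rows."""
    w, b = list(weights), bias
    for x, y in rows:
        err = sum(wi * xi for wi, xi in zip(w, x)) + b - y
        w = [wi - lr * err * xi for wi, xi in zip(w, x)]
        b -= lr * err
    return w, b


def save_state(path: Path, weights: List[float], bias: float) -> None:
    path.write_text(json.dumps({"weights": weights, "bias": bias}))


def load_state(path: Path) -> Tuple[List[float], float]:
    state = json.loads(path.read_text())
    return state["weights"], state["bias"]


# Initial run: train from scratch on historical rows (noiseless toy data, y = 2x + 1).
historical = [([x], 2 * x + 1) for x in (0.0, 0.5, 1.0, 1.5, 2.0)]
weights, bias = [0.0], 0.0
for _ in range(200):
    weights, bias = sgd_pass(weights, bias, historical)
state_path = Path(tempfile.gettempdir()) / "incremental_model_state.json"
save_state(state_path, weights, bias)

# Later run: resume from the stored weights and process only the new rows.
new_rows = [([2.5], 6.0), ([3.0], 7.0)]
weights, bias = load_state(state_path)
for _ in range(5):
    weights, bias = sgd_pass(weights, bias, new_rows)
print(f"weight={weights[0]:.3f} bias={bias:.3f}")
```

Because the second run starts from the saved state rather than from zero, it needs only a few cheap passes over the new rows instead of a full rerun on the combined dataset.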

When to use incremental learning

There are two main scenarios where incremental learning promotes efficiency and control.

- One-time modeling tasks

On large datasets, you can customize early stopping to avoid unnecessary processing, prevent overfitting, and keep clear visibility into how much data is used.

- Dynamic models that are updated regularly

For models that must adapt to new information, advanced code-first users can build pipelines that add new data to the training set without a full rerun.

Unlike other AI platforms, DataRobot lets you control large-scale data operations through incremental learning, making them faster, more efficient, and less expensive.

How Optical Character Recognition (OCR) Prepares Unstructured Data for AI

A lack of accessible, usable data can be a barrier to building accurate predictive models and powering retrieval-augmented generation (RAG) chatbots. This is especially true because 80 to 90 percent of a company’s data is unstructured, which makes it difficult to process. OCR removes these barriers by converting scanned PDFs into a searchable format that predictive and generative AI can use.

How it works

OCR is a code-first feature within DataRobot. By calling the API, you can convert a ZIP file of scanned PDFs into a dataset of text-based PDFs. The extracted text is embedded directly in each PDF document and can be accessed through the Document AI feature.
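The OCR endpoint's name and parameters are not given here, so this sketch covers only the input side: bundling a folder of scanned PDFs into a single ZIP archive with Python's standard `zipfile` module. The folder layout and the `zip_scanned_pdfs` helper are hypothetical, and the actual API call is deliberately left out; consult the DataRobot documentation for it.

```python
import tempfile
import zipfile
from pathlib import Path
from typing import List


def zip_scanned_pdfs(source_dir: Path, archive_path: Path) -> List[str]:
    """Bundle every PDF directly inside source_dir into one ZIP; return the member names."""
    pdfs = sorted(
        (p for p in source_dir.iterdir() if p.is_file() and p.suffix.lower() == ".pdf"),
        key=lambda p: p.name,
    )
    with zipfile.ZipFile(archive_path, "w", compression=zipfile.ZIP_DEFLATED) as zf:
        for pdf in pdfs:
            zf.write(pdf, arcname=pdf.name)  # flat layout: no directory prefixes
    return [p.name for p in pdfs]


# Demo with placeholder files standing in for scanned invoices.
with tempfile.TemporaryDirectory() as tmp:
    scans = Path(tmp) / "scans"
    scans.mkdir()
    for name in ("invoice_001.pdf", "invoice_002.PDF", "readme.txt"):
        (scans / name).write_bytes(b"%PDF-1.4 placeholder")
    archive = Path(tmp) / "scanned_pdfs.zip"
    added = zip_scanned_pdfs(scans, archive)
    with zipfile.ZipFile(archive) as zf:
        members = zf.namelist()
print(added)
```

Non-PDF files are skipped and the extension check is case-insensitive, since scanners often emit `.PDF`.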

How OCR Powers Multimodal AI

The new OCR functionality isn’t just for generative AI or vector databases. It also simplifies AI-enabled data preparation for multimodal predictive models, enabling you to gain richer insights from diverse data sources.

Multimodal Predictive AI Data Preparation

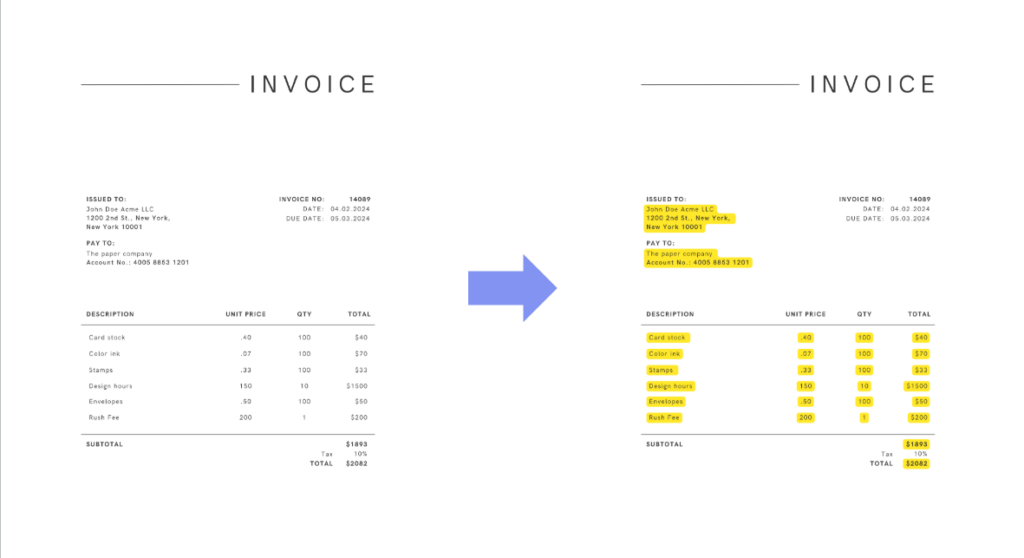

Quickly convert scanned documents into datasets of text-based PDFs. You can then use Document AI capabilities to extract key information and build features for predictive models.

For example, let’s say you want to forecast your operating costs, but you only have access to scanned invoices. By combining OCR, document text extraction, and integration with Apache Airflow, you can turn these invoices into a powerful data source for your models.
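Once OCR has made the invoice text available, the feature-building step might look like the following sketch. The invoice layout, the regular expressions, and the helper names are hypothetical; real OCR output will need patterns tuned to your documents, and in practice an orchestrator such as Airflow would schedule this step rather than a script.

```python
import re
from collections import defaultdict
from typing import Dict, Iterable, Optional, Tuple

# Hypothetical invoice layout; adjust the patterns to your actual documents.
DATE_RE = re.compile(r"Invoice date:\s*(\d{4})-(\d{2})-\d{2}")
TOTAL_RE = re.compile(r"^Total:\s*\$?([\d,]+\.\d{2})", re.MULTILINE)  # line start, so "Subtotal:" is ignored


def parse_invoice(text: str) -> Optional[Tuple[str, float]]:
    """Return (YYYY-MM, total amount), or None if either field is not found."""
    date, total = DATE_RE.search(text), TOTAL_RE.search(text)
    if not (date and total):
        return None
    return f"{date.group(1)}-{date.group(2)}", float(total.group(1).replace(",", ""))


def monthly_costs(texts: Iterable[str]) -> Dict[str, float]:
    """Aggregate parsed invoice totals per month, skipping unparseable documents."""
    costs: Dict[str, float] = defaultdict(float)
    for text in texts:
        parsed = parse_invoice(text)
        if parsed is not None:
            month, amount = parsed
            costs[month] += amount
    return dict(sorted(costs.items()))


extracted = [
    "ACME Corp\nInvoice date: 2024-03-15\nTotal: $1,234.50",
    "Globex\nInvoice date: 2024-03-28\nSubtotal: $250.00\nTotal: $265.50",
    "Initech\nInvoice date: 2024-04-02\nTotal: 980.00",
    "illegible scan",
]
print(monthly_costs(extracted))
```

The resulting month-by-month totals could then serve as the target or as lagged features for a cost forecast.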

Enhancing RAG LLMs with Vector Databases

Vector databases support more accurate retrieval-augmented generation (RAG) for LLMs, especially when they are built from larger and richer datasets. OCR plays a key role here: it converts scanned PDFs into text-based PDFs, and that text can then be embedded as vectors that give the LLM relevant context for more accurate responses.
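The retrieval step can be sketched in a few lines. To stay self-contained, this example swaps in assumptions for the real components: a word-count vector stands in for an embedding model, an in-memory list stands in for the vector database, and the text chunks are illustrative rather than real OCR output. The structure (embed chunks, embed the question, rank by cosine similarity, put the best match into the prompt) is the general RAG pattern.

```python
import math
import re
from collections import Counter
from typing import List, Tuple


def embed(text: str) -> Counter:
    """Toy stand-in for an embedding model: lowercase word counts."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query: str, index: List[Tuple[str, Counter]], k: int = 1) -> List[str]:
    """Return the k chunks whose vectors are most similar to the query vector."""
    q = embed(query)
    ranked = sorted(index, key=lambda item: cosine(q, item[1]), reverse=True)
    return [chunk for chunk, _ in ranked[:k]]


# Text chunks as they might come out of OCR'd benefits documents (illustrative only).
chunks = [
    "Dental coverage includes two cleanings per year.",
    "Full-time employees accrue 15 vacation days per year.",
    "The 401k plan matches contributions up to 4 percent.",
]
index = [(chunk, embed(chunk)) for chunk in chunks]  # the "vector database"

question = "How many vacation days do I get?"
context = retrieve(question, index, k=1)
prompt = "Answer using only this context:\n" + "\n".join(context) + f"\n\nQuestion: {question}"
print(prompt)
```

Without OCR, the scanned benefits PDFs would contribute no text to the index at all, so no chunk could ever be retrieved for the question.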

Real-world use case

Imagine building a RAG chatbot that answers complex employee questions. Employee benefits documents are often dense and difficult to search. Using OCR to prepare these documents for generative AI gives your LLM the content it needs, so employees can get fast, accurate answers in a self-service format.

Workbench Migration Drives Collaboration

Poor collaboration can be one of the biggest obstacles to rapid AI delivery, especially when teams must work across multiple tools and data sources. DataRobot’s NextGen Workbench solves this problem by unifying key predictive and generative modeling workflows in one shared environment.

This migration means you can build both predictive and generative models in a single workspace, using both graphical user interfaces (GUIs) and code-based notebooks and code spaces. It also delivers powerful data preparation capabilities in the same environment, allowing teams to collaborate on end-to-end AI workflows without switching tools.

Accelerate data preparation during model development

Data preparation often takes up to 80% of a data scientist’s time. NextGen Workbench simplifies this process with:

- Data quality detection and automated data healing: Automatically identifies and resolves issues such as missing values, outliers, and formatting errors.

- Automated feature detection and reduction: Reduces the need for manual feature engineering by automatically identifying key features and removing low-impact features.

- Out-of-the-box visualization of data analysis: Instantly creates interactive visualizations so you can explore your datasets and identify trends.
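To make "automated data healing" concrete, here is a generic sketch of two common repairs: filling missing values with the column median and clipping outliers to interquartile-range (IQR) fences. These rules, the `heal_column` helper, and the 1.5 × IQR threshold are assumptions chosen for illustration; the article does not describe which repairs Workbench actually applies.

```python
import statistics
from typing import List, Optional


def heal_column(values: List[Optional[float]], k: float = 1.5) -> List[float]:
    """Fill missing values with the median, then clip outliers to the IQR fences."""
    present = [v for v in values if v is not None]
    median = statistics.median(present)
    q1, _, q3 = statistics.quantiles(present, n=4)  # quartile cut points
    low, high = q1 - k * (q3 - q1), q3 + k * (q3 - q1)
    return [min(max(median if v is None else v, low), high) for v in values]


raw = [10, 12, None, 11, 13, 12, 11, 500, 12, 10]
healed = heal_column(raw)
print(healed)
```

The missing entry becomes the median (12) and the value 500 is pulled down to the upper fence (15.5), while in-range values pass through unchanged.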

Improve data quality and instantly visualize problems

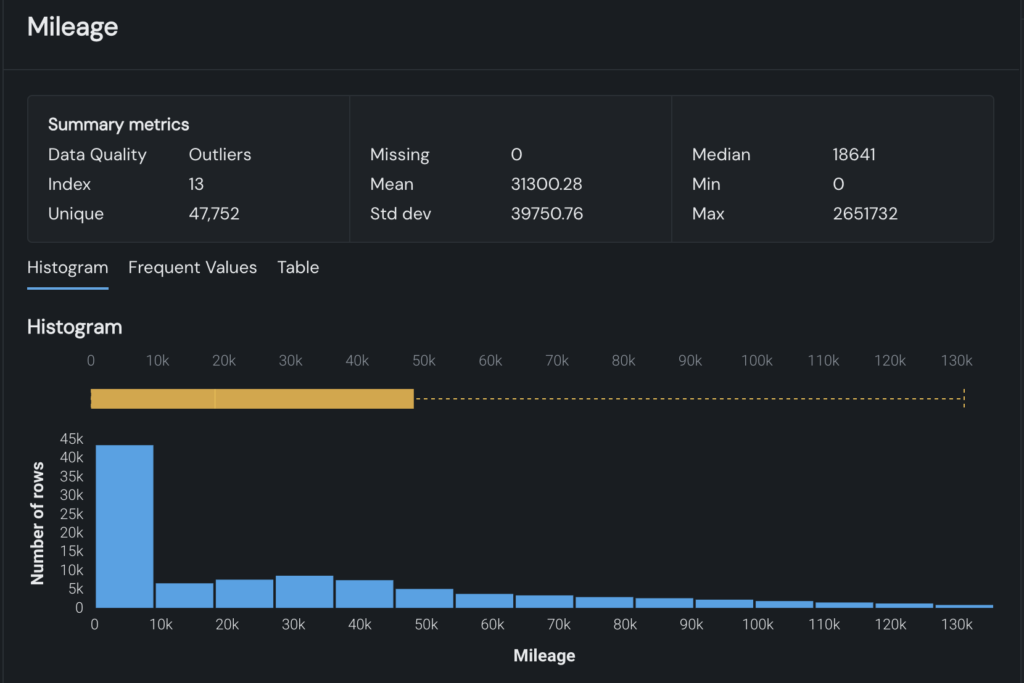

Data quality issues such as missing values, outliers, and format errors can slow AI development. NextGen Workbench addresses this with automated scans and visual insights that save time and reduce manual work.

Now, when you upload your dataset, an automatic scan will check for key data quality issues, including:

- Outliers

- Multicategorical format errors

- Inliers

- Excess zeros

- Disguised missing values

- Target leakage

- Missing images (image datasets only)

- Personally identifiable information (PII)
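A few of the checks in this list can be approximated with simple heuristics, as in the sketch below. The sentinel codes, thresholds, and email pattern are assumptions for illustration only; DataRobot's own detection logic is not described in this article and is likely more sophisticated.

```python
import re
from collections import Counter
from typing import Dict, List, Sequence

# Assumed placeholder codes that often stand in for "missing"; tune for your data.
SENTINELS = {-999, -99, 9999, 99999}
EMAIL_RE = re.compile(r"[\w.+-]+@[\w-]+\.[\w.]+")


def scan_column(values: Sequence[object], zero_share: float = 0.5, sentinel_share: float = 0.05) -> List[str]:
    """Flag excess zeros, disguised missing values, and email-like PII with simple heuristics."""
    issues: List[str] = []
    numeric = [v for v in values if isinstance(v, (int, float)) and not isinstance(v, bool)]
    if numeric:
        if sum(v == 0 for v in numeric) / len(numeric) > zero_share:
            issues.append("excess zeros")
        sentinel_counts = Counter(v for v in numeric if v in SENTINELS)
        if sentinel_counts and max(sentinel_counts.values()) / len(numeric) > sentinel_share:
            issues.append("disguised missing values")
    if any(isinstance(v, str) and EMAIL_RE.search(v) for v in values):
        issues.append("possible PII (email address)")
    return issues


dataset: Dict[str, list] = {
    "discount": [0, 0, 0, 5, 0, 0],
    "age": [34, -999, 41, -999, 29, 52],
    "contact": ["ana@example.com", "n/a", "ben@example.org"],
    "spend": [10.5, 20.0, 13.2, 8.7, 15.1, 11.0],
}
report = {column: scan_column(values) for column, values in dataset.items()}
print(report)
```

Each column gets a list of flagged issues; an empty list means none of these heuristics fired.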

These data quality checks are combined with out-of-the-box exploratory data analysis (EDA) visualizations. New datasets are automatically visualized in interactive graphs, so you can immediately see data trends and potential problems without building charts yourself. Figure 3 shows how quality issues are highlighted directly within the graph.

Automate feature detection and reduce complexity

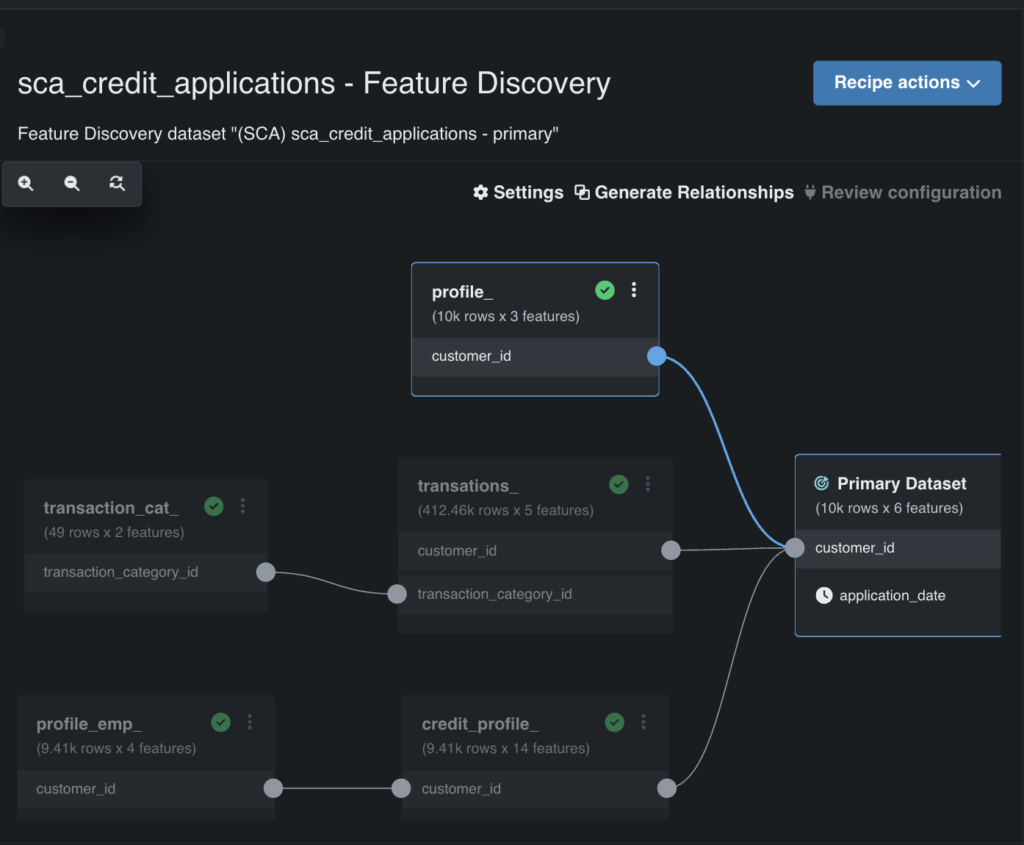

Automated feature detection simplifies feature engineering, making it easier to join secondary datasets, detect key features, and remove less influential features.

This feature scans all auxiliary datasets for shared keys, such as customer IDs (see Figure 4), and automatically joins them to the training dataset. It also reduces unnecessary complexity by identifying and eliminating low-impact features.
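The join-detection idea can be sketched as follows: look for a column shared by the training data and an auxiliary dataset whose values largely overlap, then left-join on it. The `find_join_key` and `left_join` helpers, the 80% overlap threshold, and the sample CRM table are hypothetical, and the sketch covers only the join step, not feature reduction.

```python
from typing import Dict, List, Optional

Rows = List[Dict[str, object]]


def find_join_key(train: Rows, aux: Rows, min_overlap: float = 0.8) -> Optional[str]:
    """Pick the shared column whose training values are best covered by the auxiliary data."""
    best_key, best_overlap = None, 0.0
    for col in sorted(set(train[0]) & set(aux[0])):
        train_vals = {row[col] for row in train}
        aux_vals = {row[col] for row in aux}
        overlap = len(train_vals & aux_vals) / len(train_vals)
        if overlap >= min_overlap and overlap > best_overlap:
            best_key, best_overlap = col, overlap
    return best_key


def left_join(train: Rows, aux: Rows, key: str) -> Rows:
    """Attach auxiliary columns to each training row; existing columns are never overwritten."""
    lookup = {row[key]: row for row in aux}
    joined: Rows = []
    for row in train:
        extra = lookup.get(row[key], {})
        joined.append({**row, **{k: v for k, v in extra.items() if k not in row}})
    return joined


train = [
    {"customer_id": 1, "spend": 120.0},
    {"customer_id": 2, "spend": 80.0},
    {"customer_id": 3, "spend": 45.0},
]
crm = [
    {"customer_id": 1, "region": "EU"},
    {"customer_id": 2, "region": "US"},
    {"customer_id": 3, "region": "US"},
    {"customer_id": 4, "region": "APAC"},
]
key = find_join_key(train, crm)
enriched = left_join(train, crm, key) if key else train
print(key, enriched[0])
```

A left join keeps every training row even when the auxiliary table has no match, which avoids silently shrinking the training set.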

You can maintain full control with the ability to review and customize what features are included or excluded.

Don’t let slow workflows slow you down

Data preparation doesn’t have to take up 80% of your time. Disconnected tools don’t have to slow down your progress. And unstructured data doesn’t have to be out of reach.

With NextGen Workbench, you have the tools to move faster, simplify workflows, and build with less manual effort. These features are available now; all you have to do is take advantage of them.

If you’re ready to see what’s possible, explore the NextGen experience with a free trial.

About the author

Meet Ezra Berger