In iOS 18, Apple’s Notes and Voice Memos apps get a new audio transcription feature. Here’s everything you need to know about the different types of audio transcription, how they compare, and what Apple’s implementation brings to the table.

Apple’s latest assortment of operating systems lets users transcribe audio directly within Notes and Voice Memos, in real-time and without an internet connection.

iOS 18.1, iPadOS 18.1 and macOS Sequoia 15.1 also introduce support for Apple Intelligence, meaning that users will be able to summarize and edit transcriptions through AI, though only on more recent devices.

To better explain the significance of these new features, as well as their potential impact on the third-party app market, it’s important to have a basic understanding of audio transcription as a whole, and the different types of speech-to-text processing that exist.

The process of converting recorded speech into written text is known as audio transcription. It’s commonly used in a variety of different fields and industries and has always been an essential tool for multiple types of users, including academics, business professionals, journalists as well as students.

Audio transcription makes it easy to find key information contained within an audio recording. Rather than listening to an entire recording of a speech or interview, for example, a journalist can easily search through a transcript and find the necessary details. General-purpose note-taking is also made significantly easier with audio transcription.

It’s also often used as an accessibility tool, as transcription assists users with auditory or other impairments. Students who have difficulty understanding their professor or following along during lectures may benefit more from real-time audio transcription than from the post-processing of recorded audio.

In general, there are two possible approaches to audio transcription — on-device and cloud-based. Each has its own advantages and shortcomings that users have to take into account when deciding which app is right for them.

With on-device audio transcription, audio is processed locally on the user’s hardware and converted into text without connecting to an external server. This ultimately preserves user privacy, as recordings and transcripts are not sent anywhere.

Cloud-based audio transcription works by sending audio files over the internet to specialized servers with transcription software. Once a file has been transcribed, text output is sent back to the end user. This type of transcription is often less CPU-intensive and is available on a broad range of devices.

When it comes to audio transcription, users have multiple apps and services to choose from. Some apps utilize on-device audio processing, while others are web-based services that transcribe audio remotely, through the use of external servers. Ultimately, there are pros and cons to each approach, as well as unique use cases for both on-device transcription and cloud-based processing.

Offline transcription — What it’s used for and why

Offline transcription is ideal for audio recordings that contain highly sensitive information. In journalism, for instance, this would help secure the personal information of individuals speaking to the press about confidential matters.

Transcribing audio on-device means that there’s effectively no chance of accidentally transmitting sensitive information during the transcription process.

In theory, no unauthorized third parties can listen in on these recordings or view the transcribed files, which remains a possibility with transcription services requiring an active internet connection.

Recordings of business meetings are also likely to contain sensitive information such as corporate plans, marketing, branding, and investment strategies, and product development details. This makes on-device transcription the best option for these types of recordings.

Recordings with medical information, such as therapy sessions or medical notes, obviously contain private and often sensitive information. On-device processing would ensure the privacy of all individuals involved and would be especially useful for public figures and celebrities.

In addition to this, offline audio transcription can also be used for journaling. When visiting remote or rural areas with no internet connectivity, only an on-device transcription tool can process audio. Since there are no network-related requirements, general-purpose note-taking is also made easier with offline audio transcription.

The importance of real-time audio transcription, why cloud-based apps are sometimes useful

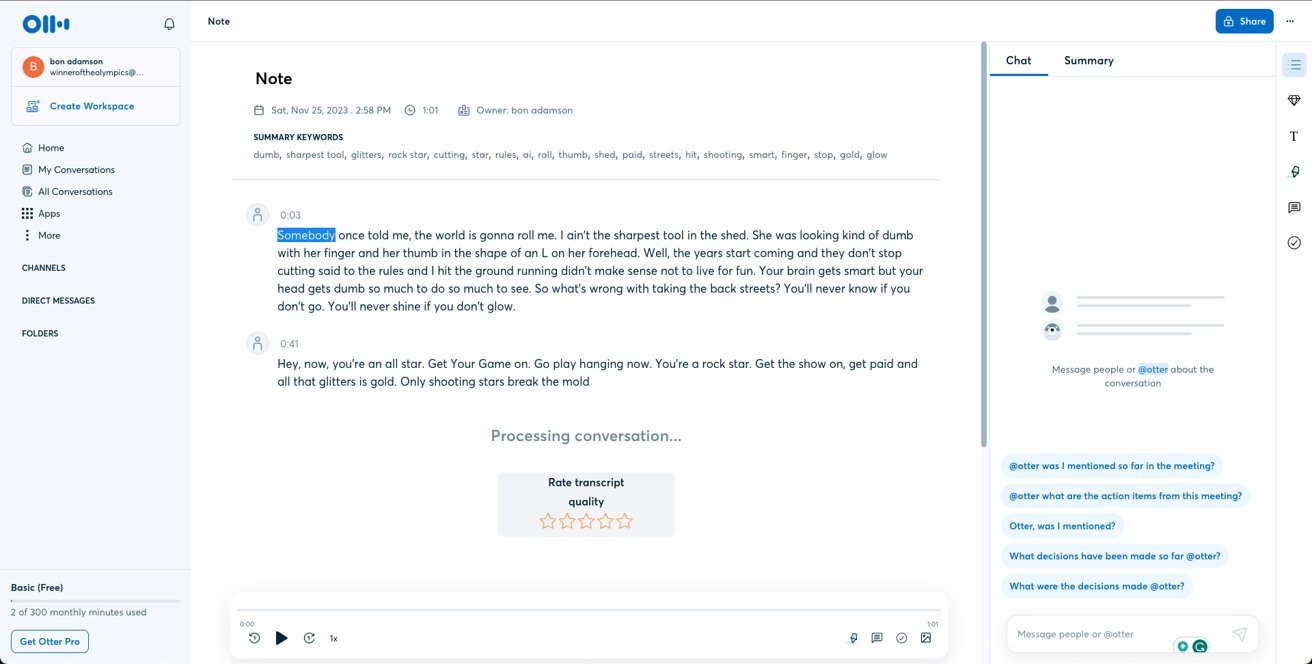

Online-only audio transcription services, such as Otter.ai, can process audio in real-time. This means that the service can transcribe meetings, conference calls, lectures, live streams, and podcasts right as they’re happening.

Otter.ai is a cloud-based service that can transcribe meetings in real-time and can even identify speakers.

In journalism, real-time transcription is especially useful for live events. This can include press conferences, award ceremonies, speeches, announcements from companies and government officials, product launch events, quarterly earnings calls related to select companies, and much more.

During events like these, a journalist may be tasked with writing a story based on a key sentence from an event, one that contains an important statistic or data point. This is where real-time transcription is absolutely necessary, as timing is crucial.

Other types of users, such as students, may need real-time transcription for more efficient note-taking during lectures. By seeing individual words and key sentences transcribed right away, it becomes easier to identify core concepts, ideas, or phrases of note within a lecture.

Many offline transcription apps cannot provide real-time audio transcripts. On the other hand, Apple’s iOS 18, although still in beta, introduces offline real-time transcription in the built-in Notes app. This makes it a potential competitor for certain cloud-based audio transcription services.

Apple’s offline audio transcription is available on different platforms, though obviously only on Apple-branded systems and on only the company’s latest software.

Web-based products such as Otter.ai are available cross-platform. This means that users can transcribe audio in real time on any device with a modern web browser, whether it be a phone, a laptop, or a tablet.

Many third-party offline transcription apps, such as those based on OpenAI’s Whisper, are limited to a singular platform. In some instances, applications are Mac-only, while others are available exclusively on Windows or iPhone.

OpenAI’s Whisper models and their use for on-device transcription

The recent popularity of artificial intelligence means that there’s an ever-increasing number of applications and generative AI models that can process audio, video, images, and text files. Some AI models are used for on-device audio transcription, as is the case with OpenAI’s Whisper.

OpenAI’s Whisper model was introduced in 2022, and is open-source. Image source: OpenAI.com

Whisper, released in 2022, is a particularly popular piece of AI-powered transcription software. Whisper is open-source, meaning that its AI models are freely available on OpenAI’s GitHub page for anyone to download and use.

The software was trained on more than 680,000 hours of audio and features multiple AI models that produce transcriptions of varying accuracy and at different speeds. Whisper can also be used for translation, as it supports 99 different languages.

Whisper’s AI models make it possible to transcribe audio entirely on-device, without an active internet connection. This comes at the cost of storage space, though, as the Whisper AI models can be up to 2GB in size, which is arguably a lot for a computer with a lower storage capacity such as 256GB.

It’s worth noting, however, that installing Whisper directly from OpenAI’s GitHub page is not as easy as installing any GUI-type macOS app. Some users might find the task daunting, due to the use of terminal commands and the like, although for exactly that reason, developers have been incorporating Whisper into their apps.
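For readers who are comfortable with a terminal, the open-source package can also be called from a short Python script. The following is a minimal sketch, not an official recipe: it assumes the `openai-whisper` package and ffmpeg are already installed, and the helper name `transcribe_file` and the file name `interview.mp3` are hypothetical. The model weights are downloaded the first time a model is loaded; after that, transcription runs without an internet connection.

```python
def transcribe_file(path, model_name="base", task="transcribe"):
    """Transcribe (or translate into English) an audio file on-device."""
    # Imported inside the function so a script still loads if Whisper is missing.
    import whisper  # third-party: pip install openai-whisper (also needs ffmpeg)

    # Smaller models such as "tiny" or "base" are faster; larger ones are more accurate.
    model = whisper.load_model(model_name)

    # task="translate" asks Whisper for an English translation instead of a transcript.
    result = model.transcribe(path, task=task)
    return result["text"].strip()

# Hypothetical usage:
# print(transcribe_file("interview.mp3", model_name="small"))
```

Choosing a larger `model_name` trades speed and disk space for accuracy, which is the same trade-off the GUI apps described below expose through their free and paid tiers.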

Why third-party apps use OpenAI’s Whisper, how they make a profit, and what they bring to the table

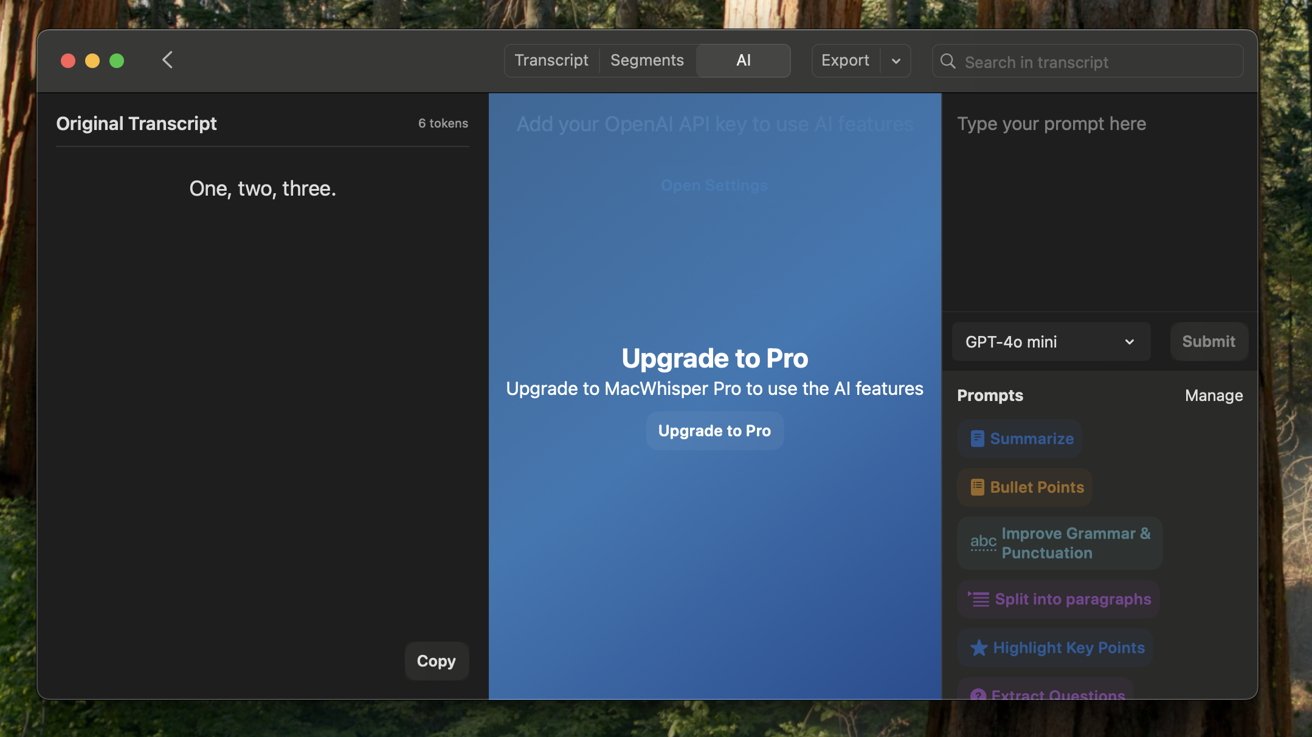

Many companies have developed GUI applications for macOS and iOS, which make use of OpenAI’s Whisper, as a way of creating a more user-friendly experience. This includes products such as MacWhisper and Whisper Transcription, and Whisper has even made its way into existing audio-related apps such as the $77 Audio Hijack.

Many third-party applications powered by OpenAI’s Whisper offer AI-powered text-editing tools

Many of these Whisper-powered apps offer basic transcription functionality for free, by providing access to smaller Whisper AI models. These models can provide quick transcriptions, but may not be as accurate as those created using the larger and more complex AI models.

In general, these types of apps make a profit by charging for the use of larger Whisper models within their respective GUI environments, or by adding additional functionality such as AI-powered summarization and draft creation.

Third-party transcription applications powered by OpenAI’s Whisper models can sometimes offer added functionality for the end-user. Instead of just transcribing audio, for instance, some third-party apps may also let users create drafts for blog posts, emails, and social media posts based on their transcript.

One drawback of these extra features, however, is that they often require an internet connection to function. For most Whisper-powered apps with text editing features, the additional transcript modification is performed by connecting to and using ChatGPT-4o, also developed by OpenAI.

On-device transcription apps based on OpenAI’s Whisper models

Many audio transcription applications based on Whisper charge customers for the use of larger Whisper AI models. Some apps also offer transcript editing and draft creation tools powered by OpenAI’s ChatGPT, but at an additional cost.

Whisper Transcription on macOS, for instance, requires a monthly subscription to use larger Whisper AI models, and to use ChatGPT-powered features. The app offers three subscription options:

- $4.99 for a weekly plan

- $8.99 for a monthly plan

- $24.99 for a one-year subscription

There’s also a lifetime purchase option that gives users indefinite access to all of the app’s features for a one-time fee of $59.99.
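To put those plans on a common footing, the prices above can be annualized. This is a rough sketch that assumes a user stays subscribed all year, counts 52 weeks per year, and ignores taxes, trials and regional pricing; the variable and function names are illustrative only.

```python
# Whisper Transcription prices quoted above, in USD.
WEEKLY = 4.99
MONTHLY = 8.99
YEARLY = 24.99
LIFETIME = 59.99

def annual_cost(price, periods_per_year):
    """Cost of staying subscribed for a full year at a given billing period."""
    return round(price * periods_per_year, 2)

weekly_per_year = annual_cost(WEEKLY, 52)    # assumes 52 billing weeks
monthly_per_year = annual_cost(MONTHLY, 12)
yearly_per_year = annual_cost(YEARLY, 1)

# Years of the annual plan that cost the same as the one-time lifetime purchase.
lifetime_breakeven_years = LIFETIME / YEARLY

print(f"Weekly plan, full year:  ${weekly_per_year:.2f}")
print(f"Monthly plan, full year: ${monthly_per_year:.2f}")
print(f"Annual plan:             ${yearly_per_year:.2f}")
print(f"Lifetime pays off after about {lifetime_breakeven_years:.1f} years")
```

Under these assumptions the weekly plan is by far the most expensive way to use the app long-term, and the lifetime purchase overtakes the annual plan during the third year.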

MacWhisper, another macOS audio transcription app, also requires payment for the use of larger Whisper AI models, and for ChatGPT integration. Users can buy a MacWhisper Pro license for a one-time payment of 39.99 euros (USD $44) for personal use. There’s also a 50% discount for journalists, though this requires sending an email to the developer.

Business users, who need to run MacWhisper on more than one machine at a time, can purchase packages of 5, 10 and 20 MacWhisper Pro licenses. They can be bought at the following prices:

- 125 euros (USD $138) for 5 MacWhisper Pro licenses

- 200 euros (USD $221) for 10 MacWhisper Pro licenses

- 300 euros (USD $331) for 20 MacWhisper Pro licenses
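The volume discount is easier to see as a price per license. A small sketch using only the euro prices listed above; the names are illustrative, and the comparison against the personal license is an assumption about how a buyer might weigh the options.

```python
SINGLE_LICENSE_EUR = 39.99
# Number of licenses -> bundle price in euros, as listed above.
BUNDLES_EUR = {5: 125.0, 10: 200.0, 20: 300.0}

def per_license(bundles):
    """Price per license for each bundle size."""
    return {count: round(total / count, 2) for count, total in bundles.items()}

def saving_percent(unit_price, reference=SINGLE_LICENSE_EUR):
    """Percentage saved per license relative to the personal license price."""
    return round((1 - unit_price / reference) * 100, 1)

unit_prices = per_license(BUNDLES_EUR)
for count, price in unit_prices.items():
    print(f"{count} licenses: {price:.2f} euros each, "
          f"about {saving_percent(price)}% below the personal price")
```

The per-license price falls from 25 euros in the smallest bundle to 15 euros in the largest.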

True enthusiasts, however, can always install the free CLI (command-line interface) version of Whisper from OpenAI’s GitHub, which gives them access to the aforementioned larger AI models.

In short, apps such as MacWhisper and Whisper Transcription offer a more accessible way of using OpenAI’s Whisper, and in some cases offer added AI-powered functionality. This is what makes them appealing to users.

Cloud-based transcription apps currently on the market

Many on-device transcription tools and apps powered by Whisper do not feature real-time transcription, and are, instead, only compatible with audio recordings. This is where certain cloud-based apps and services become useful, as they can transcribe events in real time.

Users have a variety of cloud-based audio transcription apps to choose from. As with transcription apps that use on-device processing, such as those based on OpenAI’s Whisper, there are different subscription options available for cloud-based apps. Some services offer hourly rates as well.

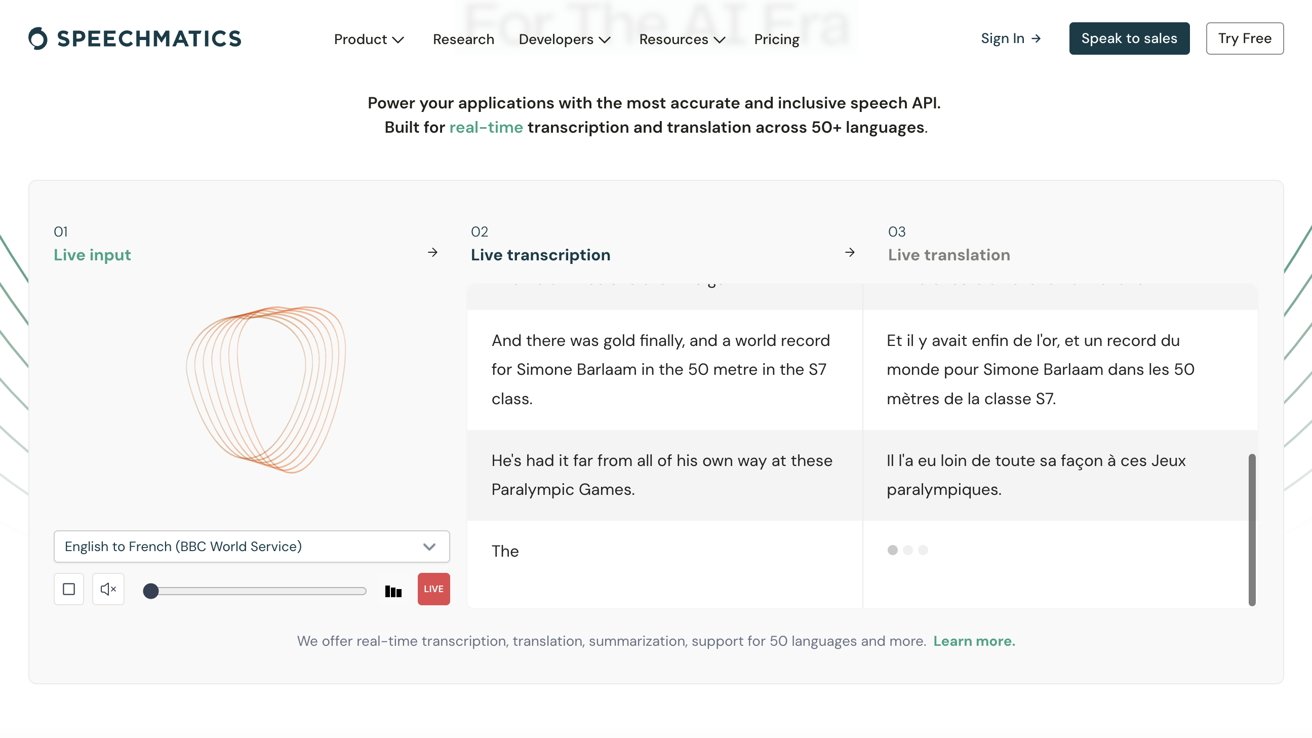

The Speechmatics website features a live demo of real-time audio transcription

Services such as Otter.ai provide a real-time transcript that can be viewed right as an event is happening. Otter can even time-stamp recordings and identify individual speakers, making it a good option for business applications.

The free version of Otter lets users transcribe 300 minutes per month, at 30 minutes per recording. For paying customers, the company offers two monthly subscription options:

- $8.33 for 1200 monthly transcription minutes, 90 minutes per conversation

- $20 for 6000 monthly transcription minutes, 4 hours per conversation

Offering similar functionality to Otter.ai, Zoom also has its own virtual meeting transcription service, though it is only available with a Pro ($14.99 per month), Business ($21.99 per month), or Enterprise license. It also requires that cloud recording be enabled for Zoom.

Speechmatics is another cloud-based, AI-powered audio transcription service that provides results in real-time. The front page of the company’s website even has a demo of this feature, which transcribes audio from BBC live broadcasts.

The free version of Speechmatics lets users transcribe 8 hours of audio per month. For paying customers, the Speechmatics website contains multiple hourly rates for the company’s audio transcription services.

The company offers varying levels of audio transcription accuracy for both real-time audio transcription as well as the processing of audio recordings.

For pre-recorded audio, the rates are:

- $0.30/hour for “Lite mode” transcription

- $0.80/hour for standard accuracy transcription

- $1.04/hour for enhanced accuracy transcription

To transcribe live audio, users will need to pay:

- $1.04/hour for standard accuracy transcription, or

- $1.65/hour for enhanced accuracy transcription
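Hourly pricing makes it straightforward to estimate a bill in advance. The sketch below simply multiplies hours by the rates quoted above; it assumes no free allowance, taxes or volume discounts apply, and the function and dictionary names are hypothetical.

```python
# USD per hour of audio, as quoted above for Speechmatics.
RATES = {
    "prerecorded": {"lite": 0.30, "standard": 0.80, "enhanced": 1.04},
    "live": {"standard": 1.04, "enhanced": 1.65},
}

def transcription_cost(hours, mode, tier):
    """Estimated cost in USD for a number of audio hours at a quoted rate."""
    try:
        rate = RATES[mode][tier]
    except KeyError:
        # For example, there is no "lite" rate listed for live audio.
        raise ValueError(f"no quoted rate for {mode!r} / {tier!r}") from None
    return round(hours * rate, 2)

ten_hours_recorded = transcription_cost(10, "prerecorded", "standard")
ten_hours_live = transcription_cost(10, "live", "enhanced")
print(f"10 hours pre-recorded, standard: ${ten_hours_recorded:.2f}")
print(f"10 hours live, enhanced:         ${ten_hours_live:.2f}")
```

At these rates, ten hours of live audio at enhanced accuracy costs roughly twice as much as ten hours of pre-recorded audio at standard accuracy.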

MAXQDA, which uses Speechmatics as a subprocessor, is a qualitative analysis program that lets users analyze different types of texts, literature, interviews and more. Among other features, the app offers audio transcription, assuming the user has purchased the software and has a MAXQDA AI Assist license.

The company charges per hour of transcribed audio. For private customers, MAXQDA’s rates are as follows:

- 23.80 euros (USD $26.27) for 2 hours worth of audio, transcribed

- 58.31 euros (USD $64.37) for 5 hours worth of audio, transcribed

- 92.82 euros (USD $102.47) for 10 hours worth of audio, transcribed

- 178.50 euros (USD $197.05) for 20 hours worth of audio, transcribed

VoicePen is a note-taking app that offers cloud-based audio transcription, through OpenAI’s Whisper API or Whisper AI models deployed on servers. The app also contains AI-powered transcript-editing tools that only work online, similar to those offered by Whisper Transcription on the Mac.

The app offers subscription options that give users access to live transcription, AI rewrites via ChatGPT-4o, and more. Users can choose between:

- $4.99 for a weekly subscription

- $9.99 for a monthly subscription

- $44.99 for an annual subscription

Compared to audio transcription apps that process audio on-device, such as those powered by OpenAI’s Whisper AI models, cloud-based services often have serious drawbacks, though they do have their advantages as well.

The advantages of Whisper’s on-device AI models compared to cloud-based processing

When used on-device, OpenAI’s Whisper models have multiple advantages relative to other transcription services. Whisper and its many app-type incarnations offer privacy-preserving on-device transcription at little or no cost while delivering acceptable levels of accuracy and performance.

OpenAI’s Whisper AI models can be found in transcription apps for macOS, such as Whisper Transcription

Unlike OpenAI’s Whisper, the free versions of cloud-based transcription services typically come with different restrictions and limitations in place. More often than not, these types of applications and websites place limits on the amount of audio a user can transcribe, the number of transcriptions one can perform, or they limit the maximum duration of audio files.

Pricing is another issue worth considering. Cloud-based transcription services have hourly rates or operate on a subscription-based model. This means that they charge for the amount of audio transcribed or for each billing period, while OpenAI’s Whisper is open-source and can be used by anyone at no cost.

Many companies that provide cloud-based transcription services see subscription-based models as an ideal way of generating profit over long periods of time. Some consumers would, arguably, rather pay a one-time fee or nothing at all.

OpenAI’s Whisper also has an advantage over cloud-based services in the number of languages it supports. Whisper supports 99 different languages, whereas Otter.ai, for example, only supports English.

Looming concerns of data privacy and security present another problem that plagues cloud-based transcription services. While many of these companies promise encrypted file transfers for audio recordings and claim that data is not shared with third parties, the end-user has no easy way of verifying these claims.

Unlike on-device applications, where the hardware can transcribe audio while disconnected from the internet, the effects of bad actors remain a possibility when it comes to cloud-based transcription services and apps.

The upsides to using cloud-based audio transcription services

Cloud-based transcription applications also have their own benefits, though. The most important among them are real-time audio transcription, cross-platform availability, and added app functionality compared to stand-alone on-device models.


Otter.ai offers real-time audio transcription and is available via web browser. Image credit: Otter.ai

The fact that certain transcription services feature a web-based user interface means that they can be used on any device with a web browser. Ultimately, this makes them more convenient than an app limited to a singular platform such as macOS.

Transcription apps that utilize cloud-based processing can also save users’ storage space. By processing audio remotely, cloud-based transcription apps eliminate the requirement for storing AI models on device, saving the user up to 2GB, which is the size of a larger Whisper AI model.

Since cloud-based transcription apps process audio on servers, they are not as CPU-intensive as on-device models. Given that cloud-based apps require less power to transcribe audio, using them could lead to better battery life relative to the frequent use of on-device transcription models.

Ultimately, for the majority of transcription-related apps, it boils down to a trade-off between privacy and security on one side, and real-time audio processing and cross-platform availability on the other.

Apple’s implementation of audio transcription may impact the market in the long run, as the company’s iOS 18 Notes app features offline audio transcription that works in real time. In doing so, the software eliminates the need for a Wi-Fi connection while also ensuring the security of user data.

Apple’s approach to audio transcription in iOS 18

With all of this in mind, it’s no surprise that Apple decided to offer on-device audio transcription within core apps in iOS 18, iPadOS 18 and macOS Sequoia. The company has a tradition of insisting on privacy and security, especially when it comes to user data.

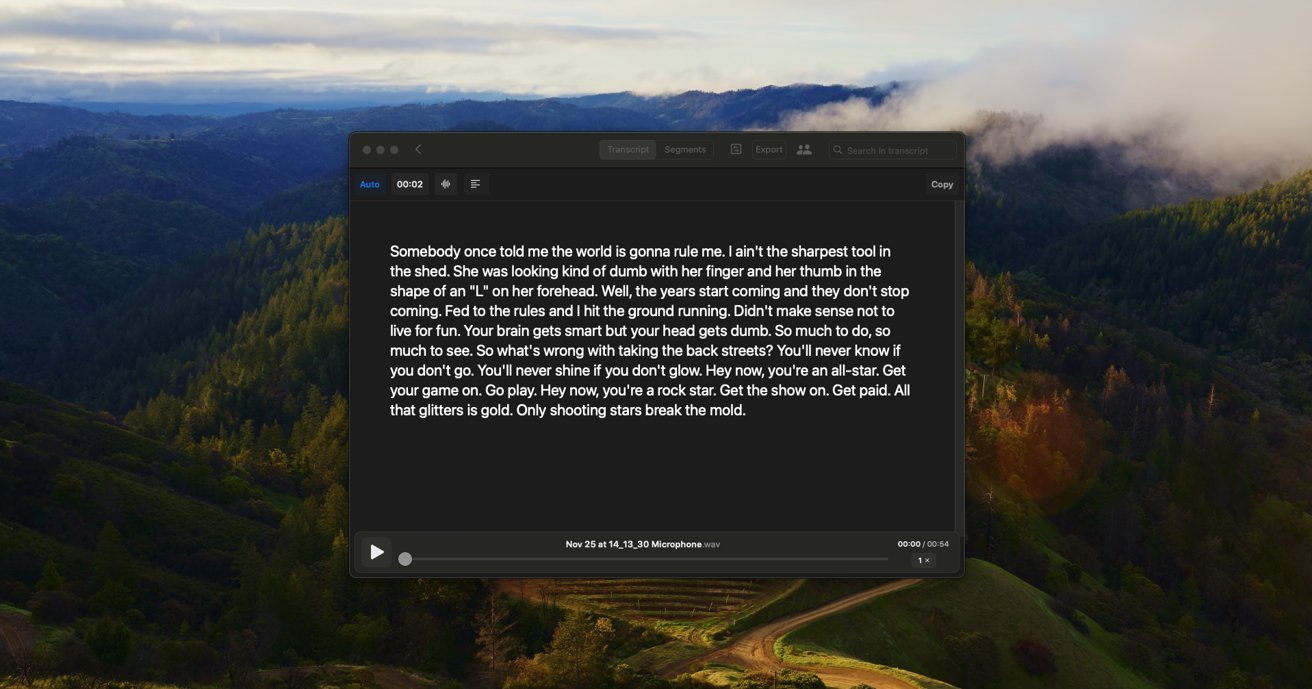

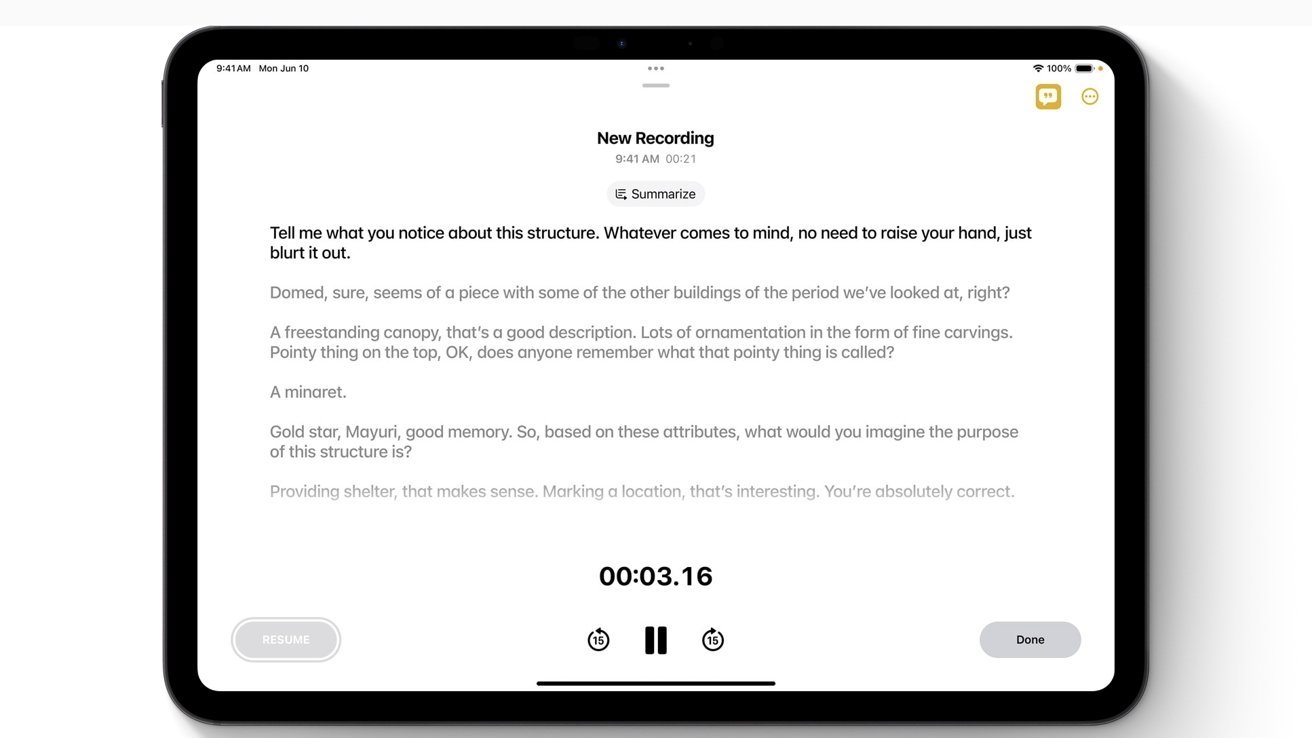

Apple’s iOS 18 and iPadOS 18 introduce support for real-time audio transcription in Notes

During Apple’s annual Worldwide Developers Conference (WWDC) on June 10, the company announced that on-device audio transcription would become available within three core apps: Notes, Phone, and Voice Memos.

Although Apple’s transcription features require an additional download from within the Notes app, real-time transcription is performed entirely on-device. This feature is already available in the current developer betas of the company’s newest operating systems — iOS 18, iPadOS 18, and macOS Sequoia.

While audio transcription was previously available in other applications like Podcasts, its addition to Notes, Voice Memos, and the Phone app allows for multiple new use cases. It also gives Apple a way of competing with existing third-party products and services that offer similar functionality.

Why Apple added audio transcription to Notes and Voice Memos

Rather than offering only audio transcription, Apple’s Notes app lets users embed audio recordings, images, links, text, and more – all within one note. This makes the app a true powerhouse for students and business professionals alike.

The new transcription functionality is present within the built-in Notes application, meaning that students could use it to record lectures and then supplement those recordings with whiteboard images or additional text, for example.

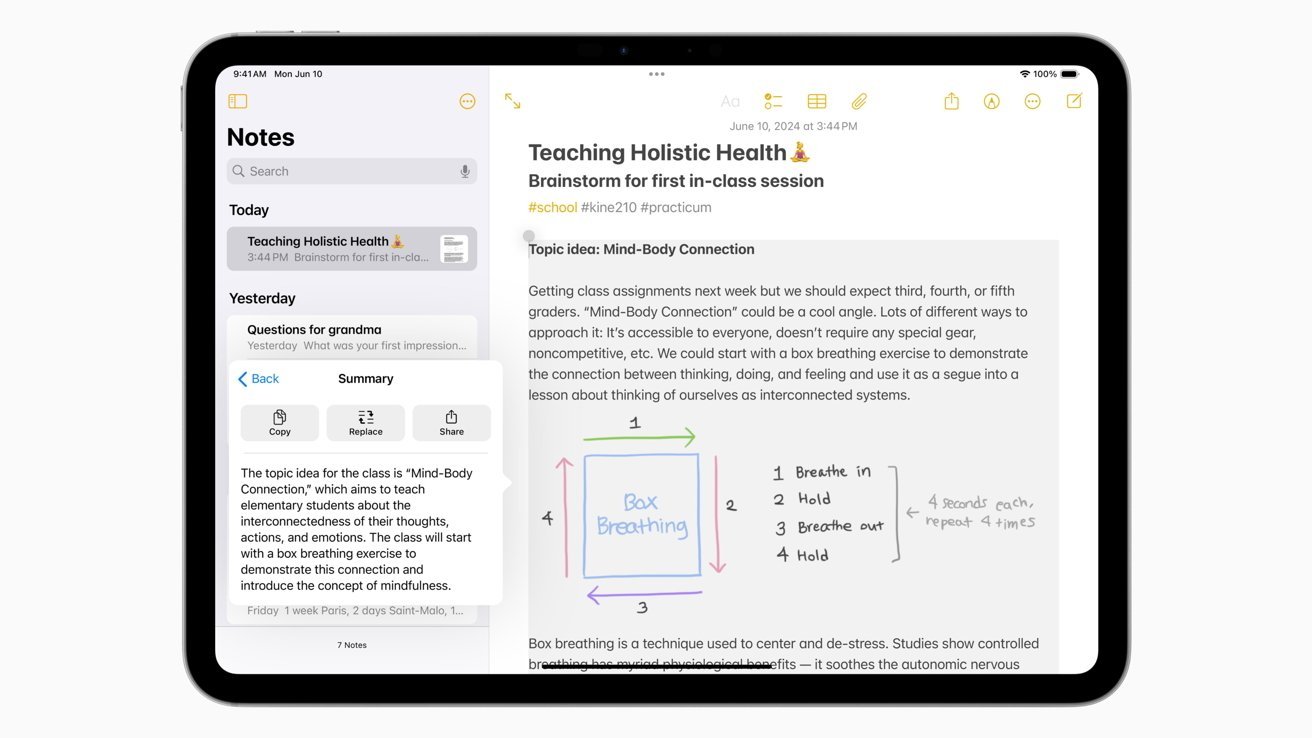

With Apple Intelligence, users can then create a summary of their transcribed audio, edit the text through Writing Tools, and will soon be able to add AI-generated images related to their text.

By adding features such as these, Apple wants to rival existing third-party note-taking and transcription apps, while also tackling the ever-increasing competition in the realm of AI through Apple Intelligence.

The potential effects of iOS 18 on the third-party transcription app market

As iOS 18 is still in beta at the time of writing, on-device audio transcription within Notes and Voice Memos is still not available on most users’ devices. This makes it somewhat difficult to assess the impact Apple’s features may have on the transcription app market.

Apple Intelligence lets users summarize their transcribed audio, and edit text through Writing Tools, but only on newer Apple devices

Nonetheless, developers of third-party transcription apps, such as VoicePen’s Timur Khairullin, remain confident. Khairullin told AppleInsider that he sees Apple’s transcription features as a positive development, saying that “Apple’s iOS 18 update will only expand the market.”

“It introduces new behaviors to users, which leads to greater adoption over time — something Apple excels at. At the same time, there’s always a market for apps that cater to users who want to go one step further,” Khairullin said.

The VoicePen developer claims that the value of third-party transcription applications is in their added functionality. Third-party apps often combine audio transcription with AI-powered text editing and draft creation tools, support for multiple audio formats, along with features created with specific markets in mind.

While Apple offers on-device audio transcription in iOS 18 as a stand-alone feature, tools for editing and summarizing those transcripts are powered by Apple Intelligence. This means that AI features such as Writing Tools and text summarization are only available on the latest iPhone 15 Pro and iPhone 15 Pro Max, or iPads and Macs with an M1 or newer chip.

As an alternative, cloud-based transcription apps offer ChatGPT-powered features. This means that users of older devices can still edit their transcripts, make drafts for blog posts, emails, and social media posts, even though their hardware doesn’t support Apple Intelligence.

In a conversation with AppleInsider, the VoicePen developer argued that transcription applications often target different markets and use cases. Khairullin claims that Otter.ai, for example, primarily focuses on transcribing live events such as meetings, rather than speech-to-text note-taking, as is the case with VoicePen.

Apple’s on-device audio transcription features, coupled with Apple Intelligence, pack a serious punch, but not enough to truly rival or endanger the third-party transcription app market. Both cloud-based and offline transcription services are likely to maintain their current foothold, by offering a broader range of features or by supporting older devices.