Recent advances in AI ultimately come down to one thing: scale.

At the beginning of this decade, AI research labs realized that if you make algorithms (or models) bigger and bigger and feed them more and more data, you can make huge improvements in what they can do and how well they can do it. The latest AI models have hundreds of billions to trillions of internal network connections, and by consuming a healthy portion of the internet, they learn to write or code just like we do.

Training bigger algorithms requires more computing power, so to get here, the computing dedicated to AI training has been quadrupling every year, according to Epoch AI, a nonprofit AI research organization.

If this growth continues through 2030, future AI models will be trained with 10,000 times more computing power than today’s state-of-the-art algorithms, such as OpenAI’s GPT-4.
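The 10,000-fold figure follows from compounding. Here is a minimal sketch, assuming a constant 4x yearly growth factor (the trend Epoch reports) and counting from GPT-4's 2023 release. The function names are illustrative and do not come from Epoch's paper:

```python
import math

# Assumption for illustration: training compute grows by a constant
# factor of 4 per year, the trend Epoch reports.
GROWTH_PER_YEAR = 4.0

def years_to_reach(multiple: float, growth: float = GROWTH_PER_YEAR) -> float:
    """Years of constant exponential growth needed to multiply compute by `multiple`."""
    return math.log(multiple) / math.log(growth)

def multiple_after(years: float, growth: float = GROWTH_PER_YEAR) -> float:
    """Compute multiple reached after `years` of constant growth."""
    return growth ** years

print(f"{years_to_reach(10_000):.2f} years")  # 6.64 years
print(f"{multiple_after(7):,.0f}x")           # 16,384x
```

Seven years of quadrupling, from 2023 to 2030, gives about 16,000x. That makes "10,000 times" a round-number reading of the same trend, since about six and two-thirds years of growth would get there.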

Epoch examined how feasible this scenario is in a recent research paper, writing that “if pursued, we could see AI advances by the end of the decade as dramatic as the gap between GPT-2’s basic text generation in 2019 and GPT-4’s sophisticated problem-solving ability in 2023.”

But modern AI already sucks up significant amounts of power, tens of thousands of advanced chips, and trillions of online examples. Meanwhile, the industry has endured chip shortages, and studies suggest that it may be running out of good training data. Assuming companies continue to invest in AI scaling, is this pace of growth technically possible?

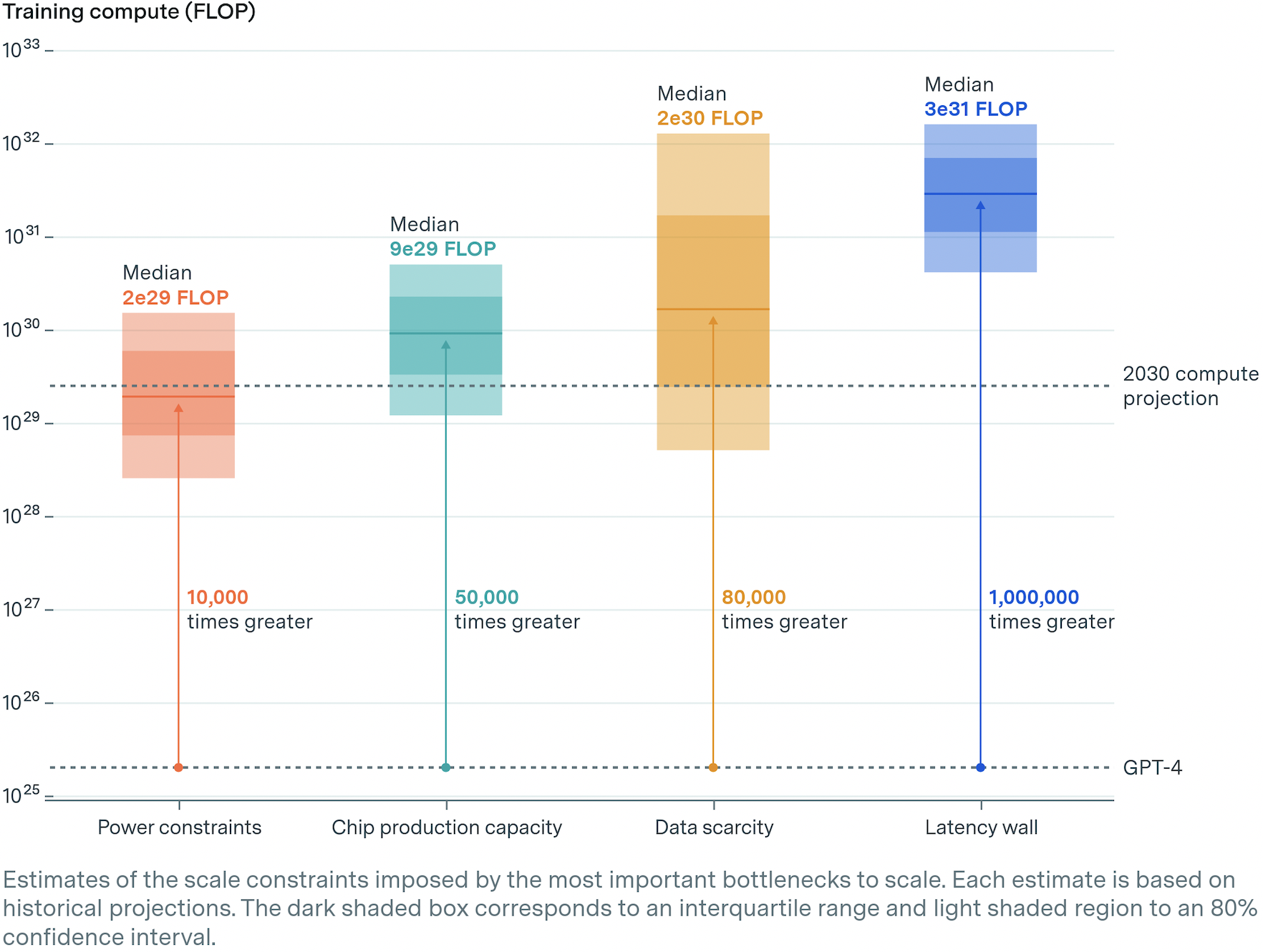

Epoch looked at the four biggest constraints to AI scaling in its report: power, chips, data, and latency. Summary: It’s technically possible to sustain growth, but it’s not a sure thing. Here’s why.

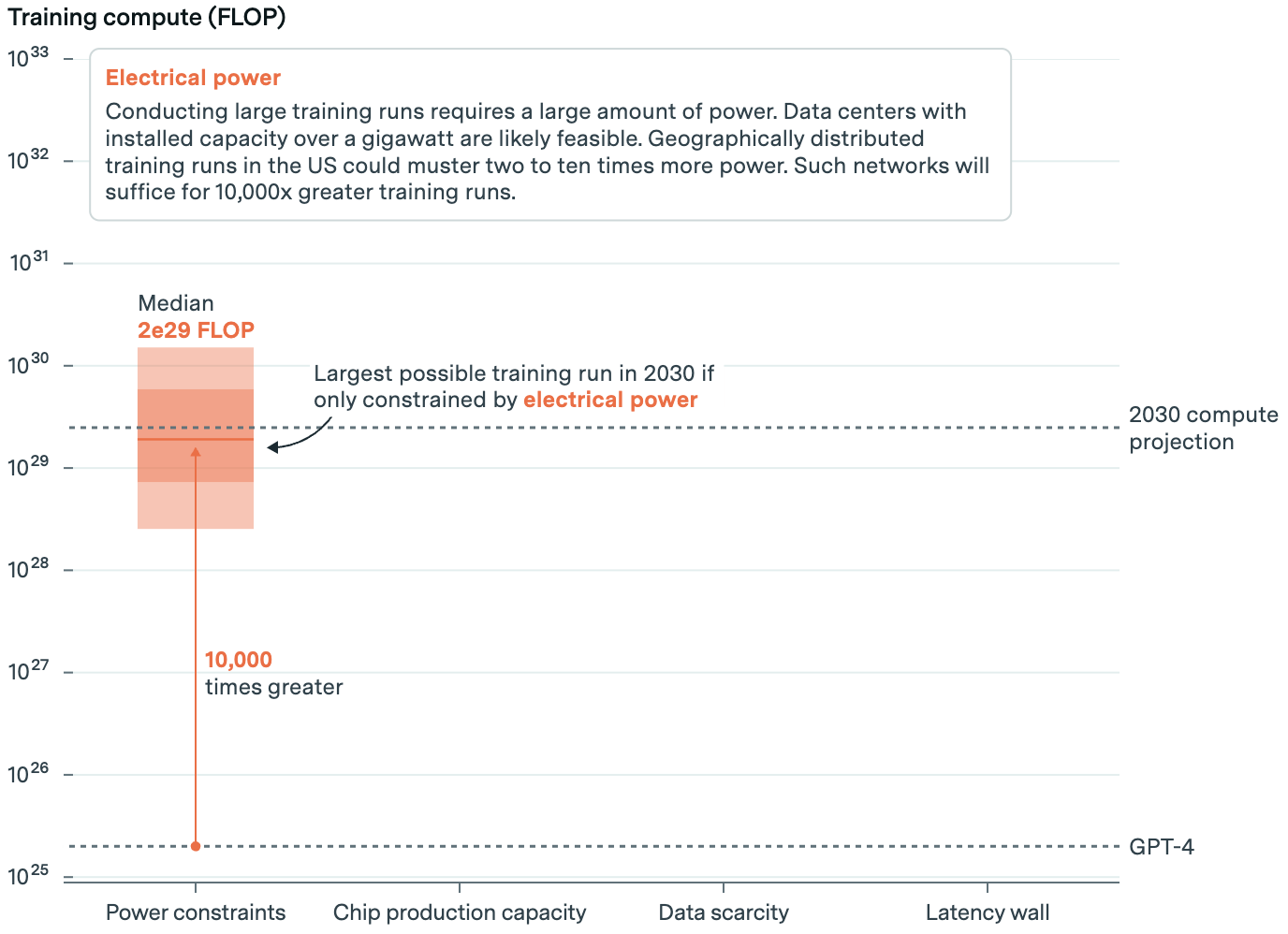

Power: It’s going to take a lot

Power is the biggest constraint to AI scaling. Data centers, which are warehouses packed with advanced chips and the gear that keeps them running, are power hogs. Meta’s latest frontier model was trained on 16,000 of Nvidia’s most powerful chips, drawing 27 megawatts of electricity.

According to Epoch, that’s roughly the power drawn by 23,000 U.S. households. Even with efficiency gains, training a frontier AI model in 2030 could require 200 times more power, or about 6 gigawatts. That’s 30 percent of the power consumed by all data centers today.
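A few lines of arithmetic can cross-check the numbers in this section. This sketch treats Epoch's rounded figures as exact, which is a simplifying assumption. The variable names are hypothetical:

```python
# Figures quoted in the text; treating the rounded values as exact is an assumption.
META_RUN_MW = 27.0               # power drawn by Meta's 16,000-chip training run
SCALE_UP_BY_2030 = 200           # Epoch's projected increase in training power
RUN_2030_GW_QUOTED = 6.0         # Epoch's "about 6 gigawatts"
SHARE_OF_ALL_DATA_CENTERS = 0.30
HOUSEHOLDS = 23_000              # Epoch's household comparison for 27 MW
HOURS_PER_YEAR = 24 * 365

# 200x today's 27 MW, converted to gigawatts (1 GW = 1,000 MW).
run_2030_gw = META_RUN_MW * SCALE_UP_BY_2030 / 1_000

# If 6 GW is 30 percent of all data-center power, the total is 6 / 0.3.
all_data_centers_gw = RUN_2030_GW_QUOTED / SHARE_OF_ALL_DATA_CENTERS

# Average continuous draw per household implied by the comparison,
# and the annual energy that draw corresponds to.
kw_per_household = META_RUN_MW * 1_000 / HOUSEHOLDS
kwh_per_household_year = kw_per_household * HOURS_PER_YEAR

print(f"2030 run: {run_2030_gw:.1f} GW")                        # 5.4 GW
print(f"All data centers today: {all_data_centers_gw:.0f} GW")  # 20 GW
print(f"Implied household use: {kwh_per_household_year:,.0f} kWh/year")
```

Two hundred times 27 megawatts is 5.4 gigawatts, which is in line with the "about 6 gigawatts" quoted. The household comparison implies roughly 10,300 kWh per household per year, and you can check that against published U.S. residential averages.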

Few power plants can generate that much, and most are likely locked into long-term contracts. But that assumes a single power plant supplies the data center. Epoch suggests companies will seek out areas where they can draw on multiple power plants through the regional grid. Given utilities’ planned growth, this route is tight but possible.

To get around the bottleneck, companies can instead distribute training across multiple data centers. Batches of training data are split among geographically separate sites, which reduces the power requirements of any one location. This strategy requires lightning-fast, high-bandwidth fiber connections, but it is technically feasible, and Google’s Gemini Ultra training run is an early example.
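Splitting batches across sites is a form of data parallelism. Here is a minimal sketch that assumes a toy one-parameter model and equal-size shards. Everything in it is hypothetical and far simpler than a real training stack. Each site computes a gradient on its own shard, the sites average those gradients over the network, and every replica applies the same update. With equal shards, the averaged gradient matches the one a single data center would compute on the whole batch:

```python
from statistics import fmean

# Toy model: y ≈ w * x with squared-error loss. All names and sizes are hypothetical.

def local_gradient(w, shard):
    """Mean gradient of 0.5 * (w*x - y)^2 over one site's shard."""
    return fmean((w * x - y) * x for x, y in shard)

def split(batch, n_sites):
    """Split a global batch into n_sites equal, contiguous shards."""
    if len(batch) % n_sites:
        raise ValueError("batch size must be divisible by the number of sites")
    size = len(batch) // n_sites
    return [batch[i * size:(i + 1) * size] for i in range(n_sites)]

def data_parallel_step(w, batch, n_sites, lr=0.01):
    """One SGD step with the batch spread across n_sites data centers."""
    shards = split(batch, n_sites)
    # In a real system each site computes its gradient concurrently;
    # here they run one after another.
    grads = [local_gradient(w, shard) for shard in shards]
    avg = fmean(grads)  # stands in for the cross-site all-reduce over fiber
    return w - lr * avg

batch = [(x, 3.0 * x) for x in range(1, 9)]  # 8 examples, true w = 3
w_single = 0.0 - 0.01 * local_gradient(0.0, batch)  # one site, whole batch
w_multi = data_parallel_step(0.0, batch, n_sites=4)
print(w_single == w_multi)  # True
```

The averaging step is where the "lightning-fast, high-bandwidth" links matter. Every step waits for gradients to cross between sites before any replica can move on.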

Overall, Epoch lays out a range of possibilities, from 1 gigawatt (local power) to 45 gigawatts (distributed power). The more power companies can tap, the larger the models they can train. Given power constraints, Epoch estimates a model could be trained on about 10,000 times more computing power than GPT-4.

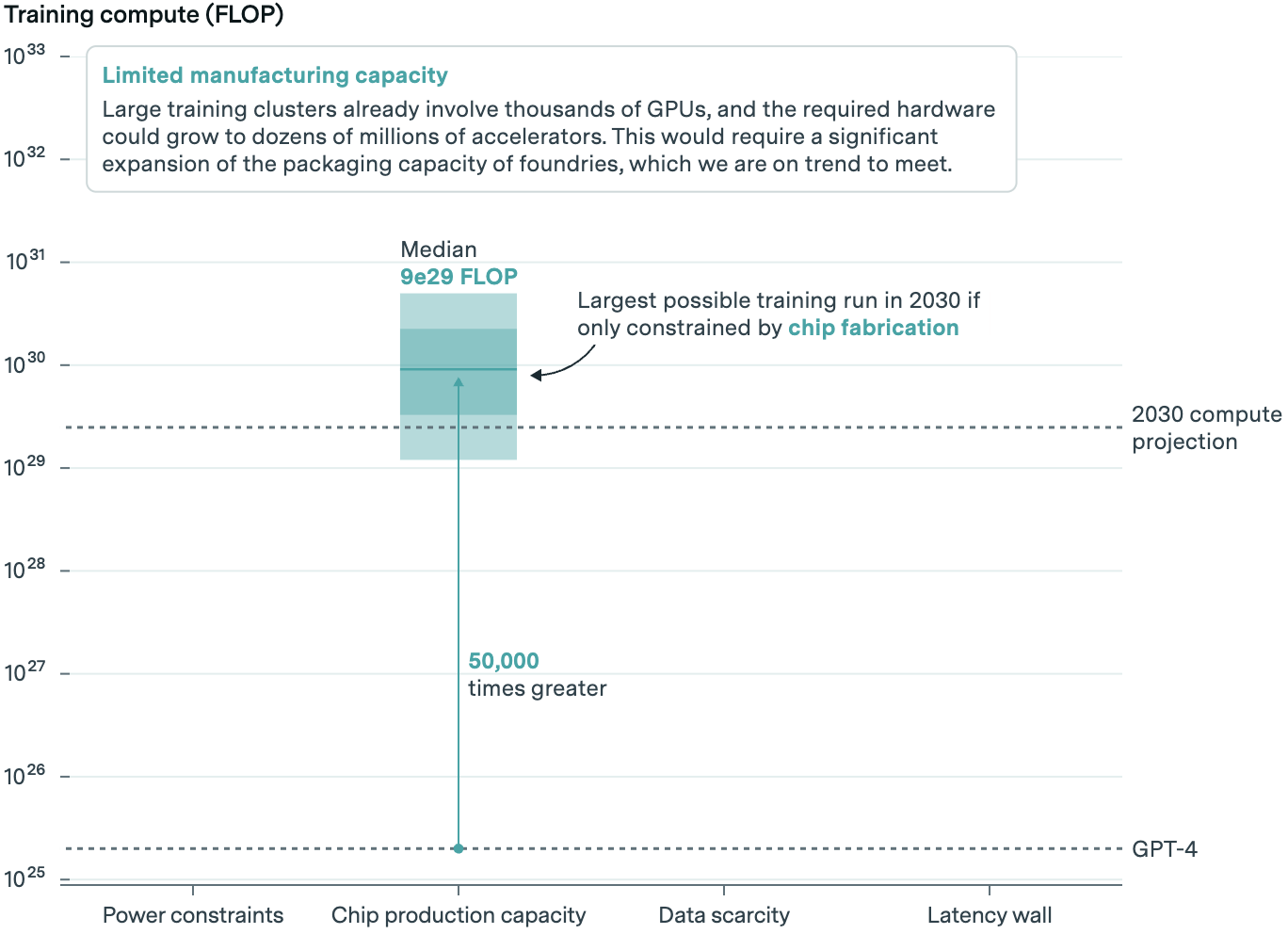

Chips: Does it compute?

All that power goes into running AI chips. Some of them serve finished AI models to customers, and some train the next generation of models. Epoch took a closer look at the latter.

AI labs train new models on graphics processing units, or GPUs, and Nvidia is the leading GPU maker. TSMC manufactures these chips and packages them together with high-bandwidth memory. Forecasts must account for all three steps: fabricating the chips, producing the memory, and packaging the two together. According to Epoch, there is likely spare capacity in GPU production, but memory and packaging could be hurdles.
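Every finished accelerator needs a fabricated chip, its high-bandwidth memory, and the packaging that joins them, so supply is capped by the scarcest of the three stages. Below is a minimal sketch of that reasoning. The capacities are made-up placeholders, not Epoch's forecasts:

```python
# Hypothetical yearly capacities, expressed as how many finished GPUs each
# stage could support on its own. Placeholder numbers for illustration only.
STAGE_CAPACITY = {
    "chip fabrication": 120_000_000,
    "high-bandwidth memory": 60_000_000,
    "advanced packaging": 45_000_000,
}

def finished_gpus(stage_capacity):
    """Return the bottleneck stage and the number of complete GPUs it allows.

    A GPU is only usable once it has passed through every stage, so output
    equals the minimum capacity across stages.
    """
    bottleneck = min(stage_capacity, key=stage_capacity.get)
    return bottleneck, stage_capacity[bottleneck]

print(finished_gpus(STAGE_CAPACITY))  # ('advanced packaging', 45000000)
```

Spare fabrication capacity, which Epoch expects, doesn't help if memory or packaging falls behind. In this sketch, raising the chip-fabrication number leaves the output unchanged.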

Given expected growth in industry production capacity, Epoch estimates that 20 to 400 million AI chips could be available for AI training by 2030. Some of those chips will be busy serving existing models, however, and AI labs will only be able to buy a fraction of the total.

The wide range reflects a good deal of uncertainty in the estimate. Still, given expected chip capacity, Epoch believes a model could be trained on about 50,000 times more computing power than GPT-4.
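To turn a chip count into training compute, you multiply chips × peak operations per second per chip × utilization × run length. The sketch below uses that standard back-of-the-envelope formula, not Epoch's full model. The GPT-4 baseline of 2e25 FLOP is inferred from Epoch's statement that 2e29 FLOP is roughly a 10,000-fold expansion over current models. The other inputs are hypothetical: a per-chip peak of about 1e15 FLOP/s (in the range of an Nvidia H100 at 16-bit precision), 40 percent utilization, and a 100-day run:

```python
SECONDS_PER_DAY = 86_400
# Baseline implied by Epoch's "2e29 FLOP is about 10,000x current models".
GPT4_FLOP = 2e29 / 10_000

def training_flop(n_chips, peak_flop_per_s, utilization, days):
    """Total operations a cluster performs over a training run."""
    return n_chips * peak_flop_per_s * utilization * days * SECONDS_PER_DAY

def chips_needed(target_flop, peak_flop_per_s, utilization, days):
    """Invert training_flop: chips required to reach target_flop."""
    return target_flop / (peak_flop_per_s * utilization * days * SECONDS_PER_DAY)

# Hypothetical inputs, not Epoch's: ~1e15 FLOP/s per chip, 40% utilization,
# and a 100-day run, aiming at 50,000x GPT-4 (about 1e30 FLOP).
target = 50_000 * GPT4_FLOP
n = chips_needed(target, 1e15, 0.40, 100)
print(f"{n / 1e6:.0f} million chips")  # about 289 million
```

The result is very sensitive to the inputs. Doubling per-chip speed or run length halves the number of chips required.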

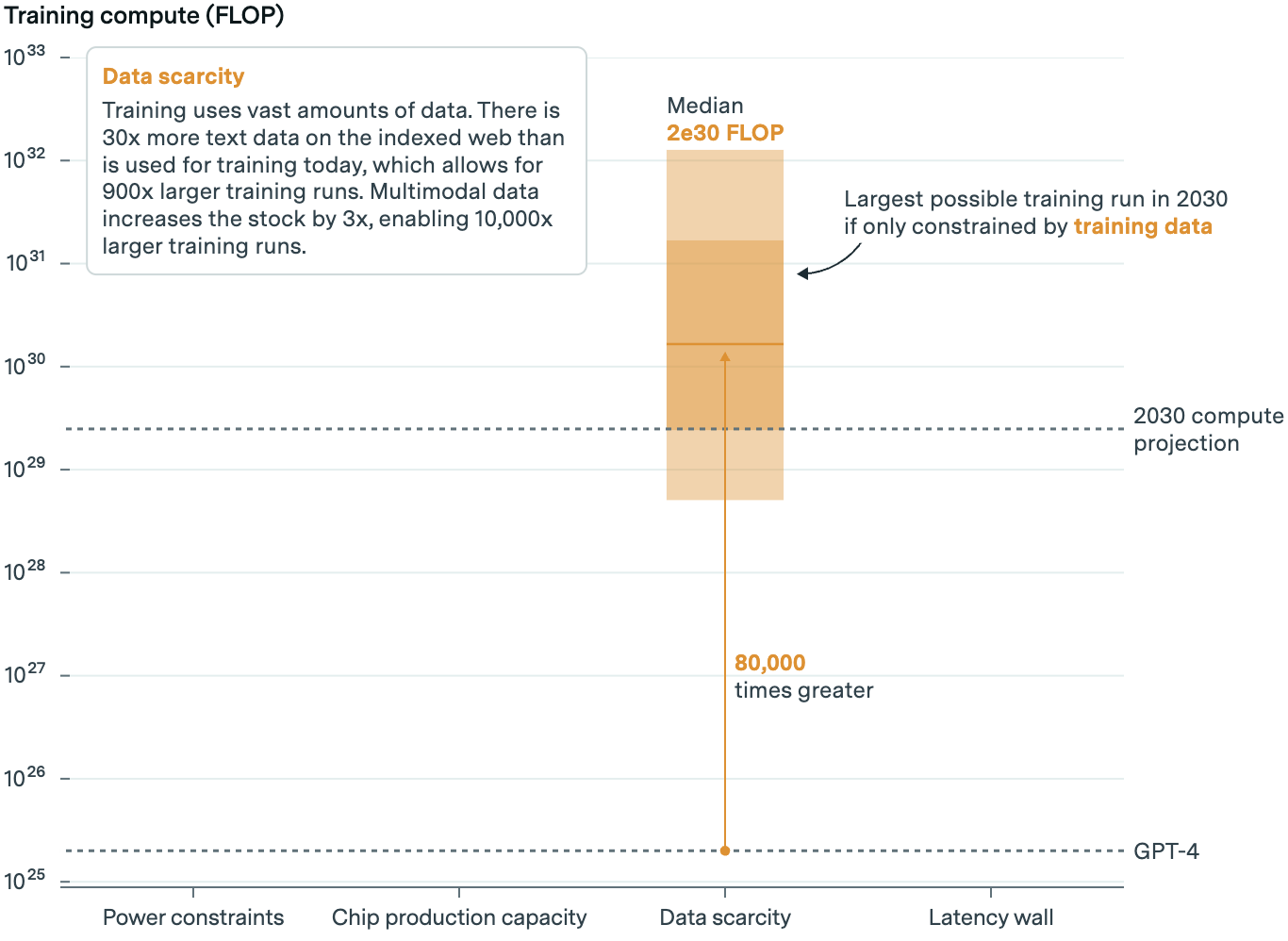

Data: AI’s online education

AI’s thirst for data and its impending scarcity are well-known constraints. Some forecast that the stock of high-quality public data will run out by 2026. But Epoch doesn’t think data scarcity will curtail model growth until at least 2030.

At today’s rates, they write, AI labs will run out of quality text data in five years. Copyright lawsuits could also affect supply, which Epoch says adds uncertainty to its estimate. But even if the courts side with copyright holders, the difficulty of enforcement and licensing deals like those struck by Vox Media, Time, The Atlantic, and others will likely limit the impact on supply (though the quality of the sources may suffer).
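"Running out" is a race between a fixed stock of data and training sets that grow every year. Here is a minimal sketch, assuming the largest training dataset grows by a constant factor per year. The stock, current dataset size, and growth rate are hypothetical placeholders, not Epoch's estimates:

```python
import math

def years_until_exhausted(stock_tokens, dataset_tokens_now, growth_per_year):
    """Years until the largest training dataset, growing by a constant
    factor each year, reaches the total stock of usable data."""
    if dataset_tokens_now >= stock_tokens:
        return 0.0
    return math.log(stock_tokens / dataset_tokens_now) / math.log(growth_per_year)

# Hypothetical placeholders, not Epoch's estimates.
STOCK = 3e14   # usable public text, in tokens
NOW = 1.5e13   # tokens in today's largest training set
GROWTH = 2.0   # yearly growth factor in dataset size

print(round(years_until_exhausted(STOCK, NOW, GROWTH), 2))      # 4.32
print(round(years_until_exhausted(2 * STOCK, NOW, GROWTH), 2))  # 5.32
```

Because growth is exponential, doubling the usable stock (through licensing, for example, or the other data sources discussed below) buys only one extra doubling period, which is one more year here.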

But crucially, models now use more than just text for training: Google’s Gemini, for example, is trained on image, audio, and video data.

Non-text data can add to the text supply through captions and transcripts. It can also expand a model’s abilities, like recognizing the food in a photo of your fridge and suggesting dinner. More speculatively, it may enable transfer learning, in which models trained on multiple data types outperform models trained on just one.

Epoch also says there’s evidence that synthetic data could stretch the data supply further, though by how much is unclear. DeepMind has long used synthetic data in its reinforcement learning algorithms, and Meta used some synthetic data to train its latest AI models. But there may be hard limits on how much can be used without degrading model quality, and generating it would take much more computing power.

All told, Epoch estimates that text, non-text, and synthetic data combined would be enough to train AI models with 80,000 times more computing power than GPT-4.

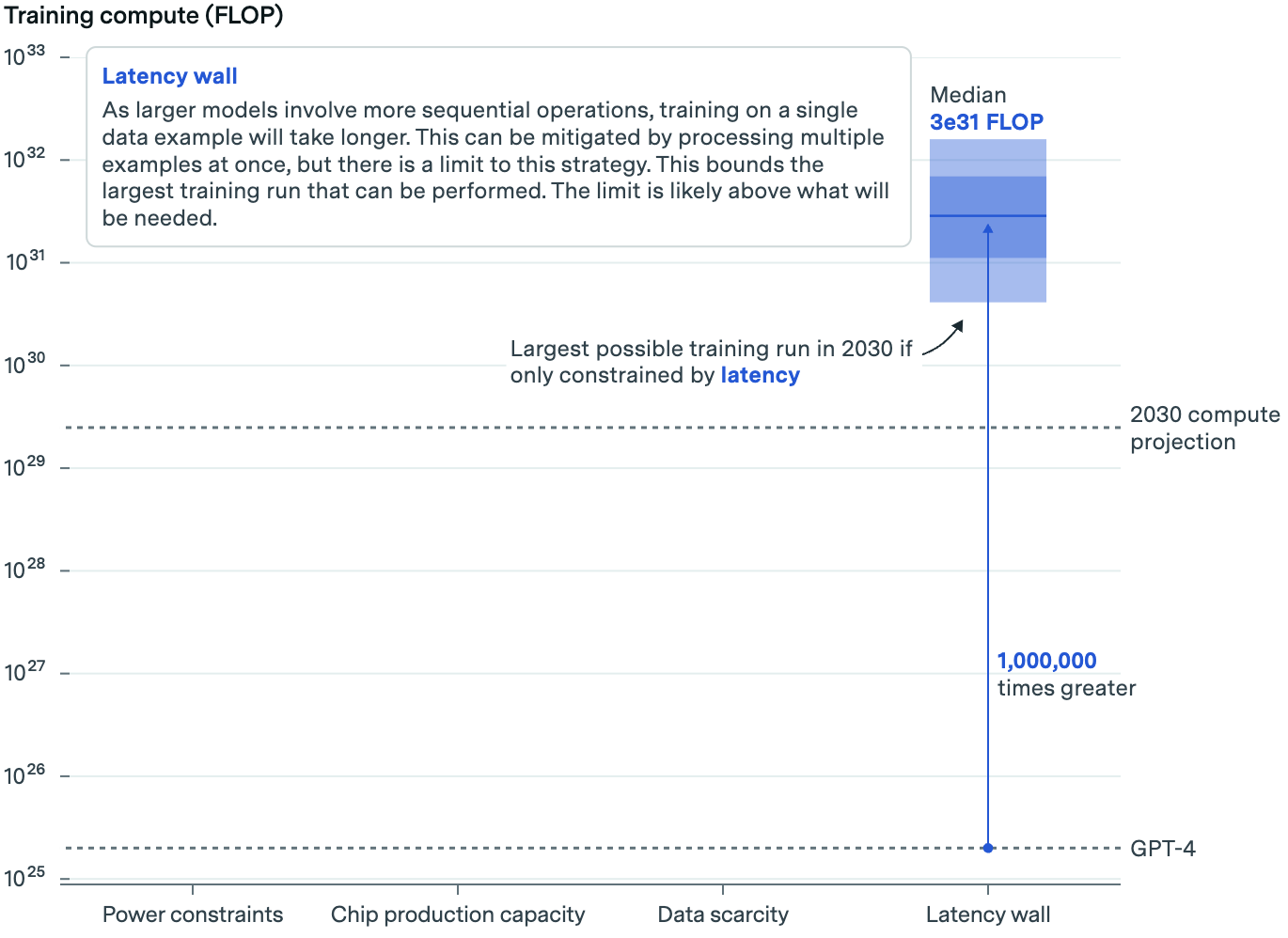

Latency: Bigger is slower

The last constraint has to do with the sheer size of coming models. The larger the model, the longer it takes data to pass through its artificial neural network. That means the time it takes to train a new model could become impractical.

This part gets technical. In short, Epoch looks at the potential size of future models, the size of the training data batches processed in parallel, and the time it takes for that data to be processed within and between servers in an AI data center. From these factors, it estimates how long it would take to train a model of a given size.
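Here is a simplified version of this reasoning. Optimizer steps happen one after another, and each step must push a batch forward and backward through every layer of the network. Each layer adds some minimum latency for computation and communication. That gives a lower bound of (tokens ÷ batch size) × layers × per-layer latency. This is a hedged sketch of the idea, not Epoch's exact formulation, and all inputs below are hypothetical:

```python
SECONDS_PER_DAY = 86_400

def min_training_days(tokens, batch_tokens, n_layers, layer_latency_s):
    """Lower bound on wall-clock training time in a simplified latency model.

    tokens          -- total training tokens
    batch_tokens    -- tokens processed in parallel per optimizer step
    n_layers        -- layers each step must pass through, in sequence
    layer_latency_s -- minimum time per layer per step (forward + backward,
                       including communication within and between servers)
    """
    sequential_steps = tokens / batch_tokens
    seconds_per_step = n_layers * layer_latency_s
    return sequential_steps * seconds_per_step / SECONDS_PER_DAY

# Hypothetical inputs: a bigger model trains on 1,000x more tokens with
# only a 10x larger batch, and has 4x as many layers.
today = min_training_days(1e13, 1e7, 100, 1e-4)
future = min_training_days(1e16, 1e8, 400, 1e-4)
print(f"{today:.2f} days vs {future:.1f} days ({future / today:.0f}x longer)")
```

In this example the larger run's minimum time is 400 times longer: 100 times as many sequential steps, each 4 times slower. The bound grows whenever data and depth grow faster than batch size, which is why latency eventually caps scaling, though Epoch puts this ceiling far above the others.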

The key takeaway: Training AI models with today’s setup will eventually hit a ceiling, but not for a while. Epoch estimates that, under current practices, it would be possible to train an AI model with 1,000,000 times more computing power than GPT-4.

Scaling up 10,000 times

You may have noticed that the scale of AI model possible under each constraint keeps rising: the ceiling is higher for chips than for power, higher for data than for chips, and higher still for latency. But when you take all of them into account, models can only scale as far as the first bottleneck, which in this case is power. Even so, significant scaling is technically possible.
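Combining the four ceilings means taking the minimum of them. Here is a minimal sketch that uses the multiples of GPT-4's training compute quoted in each section above. The roughly 2e25 FLOP baseline comes from dividing Epoch's 2e29 FLOP figure by 10,000. The function name is illustrative:

```python
# Ceilings from each section, as multiples of GPT-4's training compute.
CEILINGS = {"power": 10_000, "chips": 50_000, "data": 80_000, "latency": 1_000_000}
GPT4_FLOP = 2e29 / 10_000  # baseline implied by Epoch's figures (about 2e25)

def binding_constraint(ceilings):
    """The first bottleneck limits everything: return the smallest ceiling."""
    name = min(ceilings, key=ceilings.get)
    return name, ceilings[name]

name, multiple = binding_constraint(CEILINGS)
print(name, multiple)                      # power 10000
print(f"{multiple * GPT4_FLOP:.0e} FLOP")  # 2e+29 FLOP
```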

“Taking these AI bottlenecks into account, we could see training runs of up to 2e29 FLOP by the end of the decade,” Epoch wrote.

“This represents a roughly 10,000-fold expansion over current models, and suggests that historical expansion trends could continue uninterrupted through 2030.”

What have you done for me lately?

All of this suggests that continued scaling is technically feasible, but it rests on a basic assumption: that AI investment will grow as needed to fund scaling, and that scaling will keep delivering impressive and, more importantly, useful advances.

For now, there’s every indication that tech companies will keep pouring in cash at historic levels, with AI-driven spending on new equipment and real estate already at multi-year highs.

“When you go through a curve like this, the risk of underinvesting is much greater than the risk of overinvesting,” Alphabet CEO Sundar Pichai explained during last quarter’s earnings call.

But the spending will have to go much higher. Anthropic CEO Dario Amodei estimates that models being trained today can cost up to $1 billion, that next year’s models could cost closer to $10 billion, and that the cost per model could reach $100 billion in the years after that. It’s a dizzying sum, but one companies may be willing to pay. Microsoft is already reportedly committing that much to its Stargate AI supercomputer, a joint project with OpenAI due to launch in 2028.

It goes without saying that the appetite to invest billions or even hundreds of billions of dollars (more than the GDP of many countries and a significant share of the current annual revenue of the biggest tech companies) isn’t guaranteed. As the shine wears off, whether AI scaling continues may come down to the question, “What have you done for me lately?”

Investors are already checking the bottom line. Today, the amount invested dwarfs the amount returned. To justify greater spending, companies will have to show that scaling continues to produce ever more capable AI models, so there is mounting pressure on future models to go beyond incremental improvements. If returns shrink or not enough people are willing to pay for AI products, the story could change.

Some critics also believe that large language models and multimodal models will prove to be expensive dead ends. And there’s always the chance that a breakthrough, like the one that kicked off this round, will show we can accomplish more with less. Our brains learn continuously on a light bulb’s worth of energy and nowhere near an internet’s worth of data.

Still, if current approaches can “automate a significant portion of economic work,” the financial returns could be in the trillions of dollars, which would more than justify the spending, according to Epoch. Many in the industry are willing to take that bet. No one knows how it will turn out yet.

Image credit: Werclive 👹 / Unsplash