On Tuesday, Elon Musk’s AI company xAI announced the beta release of two new language models, Grok-2 and Grok-2 mini, available to subscribers of his social media platform X (formerly Twitter). The models are also linked to the recently released Flux image synthesis model, which allows X users to create largely uncensored photorealistic images that they can share on the site.

“Flux, accessible via Grok, is an excellent text-to-image generator, but it is also really good at creating fake photographs of real locations and people, and sending them right to Twitter,” frequent AI commentator Ethan Mollick wrote on X. “Does anyone know if they are watermarking these in any way? It would be a good idea.”

In a report published earlier today, The Verge noted that Grok’s image-generating feature appears to have minimal safeguards in place, allowing users to create potentially controversial content. In tests, Grok generated images depicting political figures in dangerous situations, copyrighted characters, and scenes of violence when requested.

According to The Verge, Grok claims to have certain restrictions, such as avoiding pornographic or excessively violent content, but these rules appear to be inconsistently applied in practice. Unlike other major AI image generators, Grok doesn’t appear to reject prompts that include real people, nor does it add identifying watermarks to its output.

Given what people have created so far (including images of Donald Trump and Kamala Harris kissing or giving a thumbs-up on their way to the Twin Towers in what appears to be a depiction of the 9/11 attacks), unfettered output may not last long. But since Elon Musk has made a big deal about “free speech” on his platform, the feature may be here to stay (at least until someone sues for libel or copyright infringement).

The shocking output of Grok’s image generator raises what is, at this point, an age-old question about AI: Should responsibility for the misuse of an AI image generator fall on the person who wrote the prompt, the organization that created the AI model, or the platform that hosts the image? There is no clear consensus so far, and the question has not yet been settled legally, but a recently proposed US law called the NO FAKES Act would presumably hold X liable for generating realistic image deepfakes.

Grok-2 still hasn’t broken the GPT-4 barrier

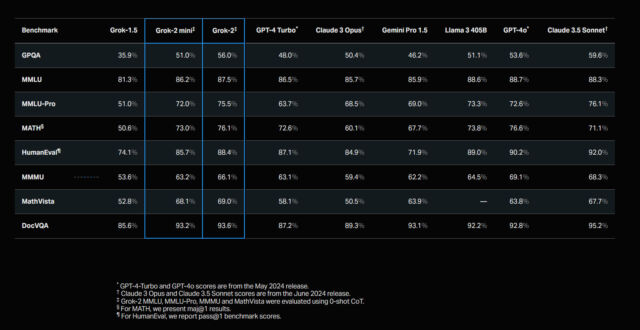

Beyond the images, xAI claims on its release blog that Grok-2 and Grok-2 mini represent significant advancements in capability, with Grok-2 reportedly outpacing some of its major competitors in recent benchmarks and what we call “vibemarks.” While it’s always wise to be skeptical of such claims, the “GPT-4 class” of AI language models (those with capabilities similar to OpenAI’s model) appears to have grown, but the GPT-4 barrier has yet to be broken.

“There are now five GPT-4 class models: GPT-4o, Claude 3.5, Gemini 1.5, Llama 3.1, and now Grok 2,” Ethan Mollick wrote on X. “All of the labs keep saying there is still huge room for improvement, but we haven’t yet seen a model that truly beats GPT-4.”

xAI says it released an early version of Grok-2 under the name “sus-column-r” on LMSYS Chatbot Arena, where it reportedly achieved a higher overall Elo score than models like Claude 3.5 Sonnet and GPT-4 Turbo. Chatbot Arena is a popular subjective vibemarking website for AI models, but it has recently become the subject of controversy after people disagreed with the high ranking of OpenAI’s GPT-4o mini on its leaderboard.

According to xAI, both new Grok models show improvements over their predecessor, Grok-1.5, in graduate-level science knowledge, general knowledge, and math problem solving, as measured by benchmarks that have themselves drawn controversy. The company also highlighted Grok-2’s performance on visual tasks, claiming state-of-the-art results in visual math reasoning and document-based question answering.

Both models are now available to X Premium and Premium+ subscribers through an updated app interface. Unlike some competitors in the open-weights space, xAI does not make its model weights available for download or independent verification. This closed approach stands in stark contrast to Meta, which recently released its Llama 3.1 405B model for anyone to download and run locally.

xAI plans to release both models via an enterprise API later this month. The company says the API will feature multi-region deployment options and security measures such as mandatory multi-factor authentication. Details on pricing, usage limits, or data handling policies have not yet been announced.

Realistic image generation aside, perhaps the biggest drawback of Grok-2 is its deep integration with X, which makes it prone to pulling inaccurate information from tweets. It’s like having a friend who insists on checking social media before answering any question, even when social media isn’t particularly relevant.

As Mollick points out on X, this tight coupling can be annoying. “Right now I only have the Grok 2 mini, which seems like a solid model, but the RAG connection to Twitter often seems to work poorly,” he writes. “The model is fed Twitter results that seem unrelated to the prompt, and then desperately tries to stitch them together into something coherent.”